Linky #17 - Learning by Doing

It’s conference season again, which means plenty of ideas, experiments, and conversations about how we build quality into our systems. This week’s picks explore how we learn best from hands-on experience, solid fundamentals, and small experiments, whether we’re testing software, building resilience, or just trying to make sense of AI’s impact on our work.

Quality Engineering Newsletter is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

Latest post from the Quality Engineering Newsletter

I’m deep into conference season, so expect a few reflections from the ones I’ve attended recently and a few more coming up.

Lean Agile Scotland (#LAScot) was all about working with complexity: understanding how it triggers stress, and how resilience helps us work with it rather than against it.

TestBash (now MotaCon) continues the trend of shifting from testing to building quality in. Testing remains essential (it tells us what the current quality is), but the focus is moving toward how we act on that information.

And for those who want to dive deeper, here are my notes on the top three talks from TestBash: A Tester’s Role in Evaluating and Observing AI Systems by Carlos Kidman, Why Automation Is Holding Back Continuous Quality: Finding Balance in Modern Testing by Philippa Jennings, and GenAI Wars Episode 3: Return of the Explorer by Martin Hynie.

Is there a topic or problem you’d like to hear about? Comment below or reply to this email with your ideas.

Why AI isn’t what you think

A fascinating interview with Benedict Evans. I’ve been reading his work for years (his newsletter is always worth a follow), and he has some of the most original takes on the tech industry. His views on AI are no different. The whole interview is worth a listen, but a few points stood out for me:

The data advantage incumbents supposedly have is illusory, mainly because LLMs require vast amounts of generalised text, which is readily available, making data effectively a commodity.

ChatGPT has captured the brand position like Google did for search, but the underlying models are becoming commodities with no clear product differentiation.

Only 10% of people use AI daily and another 15-20% weekly, while 20-30% have tried it and didn’t get it. That suggests a major adoption gap despite free access, because people struggle to map AI’s capabilities to their actual tasks.

Writing is thinking, and delegating writing to AI means missing the chance to think clearly. Students using AI for homework avoid the mental work that builds reasoning skills.

If you’re short on time, FS Blog also summarises the key takeaways well: Benedict Evans: Why AI Isn’t What You Think

Summary of DORA 2025

Tl;dr

A long report that doesn’t really say much, apart from reinforcing what many (including myself) already suspected – AI amplifies strengths and weaknesses, and strong engineering practices are essential. Beyond that, there’s little that feels genuinely new or actionable.

What stands out 👀

• AI is an amplifier: strong teams get stronger, weak ones see problems magnified.

• Engineering fundamentals matter: small batches, fast feedback, version control, good architecture and a learning culture are prerequisites. Without them, AI brings little benefit – sometimes harm.

• “The greatest returns on AI investment come not from the tools themselves, but from a strategic focus on the underlying organisational system”. That echoes something I posted recently: maybe it’s not the tech at all!

What’s less clear ☁️

• Vague on benefits: it claims results are “better” than last year, but switches from % changes to abstract effect sizes. That’s not apples-to-apples, so progress is hard to judge.

• Vague on foundations: the seven “foundational practices” it advocates are described at a very high level, with very little detail you could take away and act on.

• Reliant on self-reports: the evidence is surveys and case studies, not delivery metrics. Self-reporting tends to overstate benefits (as METR showed), an ongoing watch-out with DORA studies.

It’s nice to see that the fundamentals of good engineering practice still matter, even in our current AI world. I did wonder whether all this focus on quality engineering might be negated because we can now build and test faster, but so far that doesn’t seem to be the case.

AI amplifies whatever you’re already doing. If your teams work smarter, it helps you scale that. But if you’re working harder (cutting corners or skipping steps) it amplifies that too. Via DORA Report on AI in Software Development: Amplifying Strengths and Weaknesses | Rob Bowley posted on the topic | LinkedIn

How do we create more experiential learning in software engineering teams?

Japanese nurses are being asked to wear suits that mimic physical decline.

They come with:

• Weighted limbs to imitate frailty

• Restricted movement to replicate stiffness

• Vision-distorting glasses to simulate cataracts

Spend 10 minutes in one of these suits and you don’t need a module on empathy for the elderly.

The impact isn’t more knowledgeable nurses.

But ones who are more patient, empathetic, and effective.

It’s a masterclass in experiential learning. If you want people to really understand something, don’t lecture them. Let them experience it first-hand.

Because if you want people to know something, tell them the facts.

But if you want them to change, give them an experience.

This is a brilliant example of experiential learning, the kind that changes behaviour, not just knowledge.

So what’s the equivalent for software teams? Perhaps game days or chaos engineering exercises, where you simulate outages or remove critical infrastructure and let teams work through recovery. We can learn from others’ failures, but we learn best from our own. Via LinkedIn | Matt Furness

You’ve got to go slow to go fast

Anyone can move fast. That’s the trap. Speed is cheap, but the ability to be fast without being reckless is expensive.

The chess master’s lightning moves come from decades of slow study. The CEO who is in the weeds knows where the problems lurk.

Details don’t slow you down; they speed you up.

It’s exactly like building software. You have to go slow at the start, to understand, to design, to build quality in, before you can truly go fast. Via The Right Time to Read

Only read what interests you

“There is only one way to read, which is to browse in libraries and bookshops, picking up books that attract you, reading only those, dropping them when they bore you, skipping the parts that drag – and never, never reading anything because you feel you ought, or because it is part of a trend or a movement. Remember that the book which bores you when you are twenty or thirty will open doors for you when you are forty or fifty – and vice versa. Don’t read a book out of its right time for you.”

This reminded me of a recent chat where someone was struggling to keep up with everything they wanted to read. I used to feel the same, but now I just focus on what catches my interest. If I don’t get to it, that’s fine. If it’s a good idea, it’ll come around again. Via The Right Time to Read

Don’t outsource your value judgement to an AI

Vernon Richards shared the Vaughn Tan Rule:

Do NOT outsource your subjective value judgments to an AI, unless you have a good reason to, in which case make sure the reason is explicitly stated.

He breaks this down beautifully in the context of testing. For me, it captures what LLMs are really good at: helping you do what you already know how to do, faster.

But if you use them to do something you can’t evaluate yourself, your ability to judge their output disappears. That’s when you risk outsourcing your value.

If AI can do it and you can’t tell if it’s any good... what do we need you for?

Vernon has some great takes on quality, and I’d highly recommend subscribing. Via What Is The Vaughn Tan Rule and How Does It Impact Testing?

Being “technical” isn’t enough

You have to be good at the technical work first, whatever that means for you. It’s the thing you were hired for and this has to be your first priority. And the better you are, the further this can take you. You write better code, or better reports, or better designs, and people notice. That’s enough for a while.

But eventually it’s not. Everyone around you is technically strong too. So for most of us, you won’t stand out anymore. You need to increase your impact in other ways.

The biggest gains come from combining disciplines. There are four that show up everywhere: technical skill, product thinking, project execution, and people skills. And the more senior you get, the more you’re expected to contribute to each.

Technical skill is your chosen craft. Product thinking is knowing what’s worth doing. Project execution is making sure it happens. People skills are how you work with and influence others.

I really like how Josh frames this. “Technical” so often means coding, but it’s far broader than that.

These four disciplines are vital for quality engineers too. Knowing where to apply your skills, how to make things happen, and how to bring others with you. I’ve developed these by being curious about other disciplines and learning how they work, which is why I attend such a range of conferences, not just testing ones. Via Being good isn’t enough | people, ideas, machines

You don’t need a 10-year plan. You need to experiment.

This video by Anne-Laure Le Cunff is genuinely fascinating. She argues that instead of sticking to rigid, long-term plans, we should embrace experimentation: running small, deliberate tests to learn and adapt.

A key idea she shares is the power of choice between stimulus and response. Our reactions are often automatic, but by identifying and labelling our emotions, we shift from emotional to rational responses. Apps like How We Feel can help with this by giving us language for what we experience.

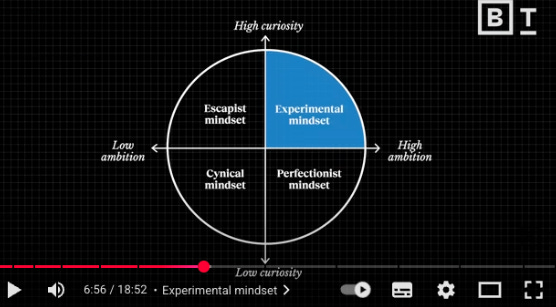

She also introduces a 2×2 matrix on curiosity and ambition. The goal is to operate in the high-curiosity, high-ambition quadrant, which she calls the experimental mindset. That’s where progress and learning happen.

The experimental mindset is about observing, asking questions, and running small experiments to discover what works. It’s an iterative, uncertainty-friendly way to approach both work and life, and exactly the kind of thinking we need in quality engineering.

I’ve been enjoying these videos by Big Think, well worth a subscribe. Via You don’t need a 10-year plan. You need to experiment. | Anne-Laure Le Cunff - YouTube

Root Cause Analysis: beyond the 5 Whys

My summary of the XPManchester meetup I went to this week:

The topic? Root Cause Analysis: beyond the 5 Whys.

When I first saw the title, I assumed it would be another talk about how Root Cause Analysis (RCA) and 5 Whys are useful for breaking down problems. But I’ve always felt that while RCA and 5 Whys can help with straightforward issues, they fall short for complex system failures.

The problem is that simple RCA often follows our existing perceptions. Once we hit the “problem” we think it is, we stop digging. The process can even drift into blaming rather than understanding.

This session took a different direction! Instead, it explored Goldratt’s Current Reality Trees, a tool from his book It’s Not Luck. It’s a fascinating way to map complex systems and reveal cause-and-effect relationships that aren’t always linear.
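To make the contrast with a linear 5 Whys chain a little more concrete, here is a minimal Python sketch of the idea behind a Current Reality Tree, under heavy simplifying assumptions: every node and link is a hypothetical example, and it ignores the logical AND groupings and sufficiency checks a real tree relies on. Causes point to the effects they contribute to; the sketch finds the causes nothing else explains, then counts how many of the observed undesirable effects (UDEs) each one reaches.

```python
# Hypothetical cause -> effects links for an illustrative delivery problem.
# A real Current Reality Tree also groups causes with AND logic; this sketch does not.
links = {
    "no shared test environment": ["tests run late", "flaky integration tests", "slow pipeline"],
    "tests run late": ["bugs found after release"],
    "flaky integration tests": ["failures ignored"],
    "failures ignored": ["bugs found after release"],
    "large batch releases": ["slow pipeline"],
}

# Undesirable effects (UDEs) we actually observed and want to explain.
udes = {"bugs found after release", "slow pipeline"}


def root_causes(links):
    """Causes that never appear as the effect of anything else in the tree."""
    effects = {e for targets in links.values() for e in targets}
    return sorted(cause for cause in links if cause not in effects)


def reachable(links, start):
    """Every node reachable from start by following cause -> effect links."""
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        for nxt in links.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


if __name__ == "__main__":
    for cause in root_causes(links):
        explained = reachable(links, cause) & udes
        print(f"{cause}: explains {len(explained)} of {len(udes)} UDEs")
```

Two separate paths converge on "bugs found after release", which a single chain of whys would tend to flatten into one line. The root cause that reaches the most UDEs is the candidate core problem worth testing first.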

It was a great session, and if you don’t already, I’d highly recommend going to meetups outside your discipline. They’re a great way to learn how other disciplines work, and if you’re lucky, there’s usually free pizza and drinks. Sadly, not at XPManchester, but it was still a good way to spend a Thursday evening. Via Just been to my 18th meetup this year! This time it was XP Manchester | Jitesh Gosai

Closing thought

What ties all of these together for me is that good engineering and good learning rarely come from speed or certainty. They come from curiosity, experimentation, and experience.

Whether it’s observing AI systems, slowing down to build quality in, or learning through failure, the goal is the same: to keep discovering what works and to do more of that.