TestBash Brighton 2025: Reflections on Two Days of Quality

This week I was back at TestBash Brighton, one of my favourite conferences to attend and speak at. There’s something about the Ministry of Testing (MoT) community that sets it apart from other events. It’s not just the great talks (though there were plenty of those). It’s the conversations in the breaks, the questions from the audience, and the way everyone comes together around a shared purpose.

TestBash has always felt community-first rather than conference-first, and you can feel it in the atmosphere. That’s why I think it’s probably one of the best conferences for testers, quality engineers, and anyone who cares about building better software.

This year’s programme didn’t have an official theme, but a strong one emerged anyway: quality everywhere. Talks weren’t just about testing techniques or tools; they explored leadership, careers, AI, resilience, and systems thinking. This reflected the shift I’ve seen over the last few years, from focusing narrowly on “testing” to embracing a holistic view of quality.

Here are a few of my highlights.

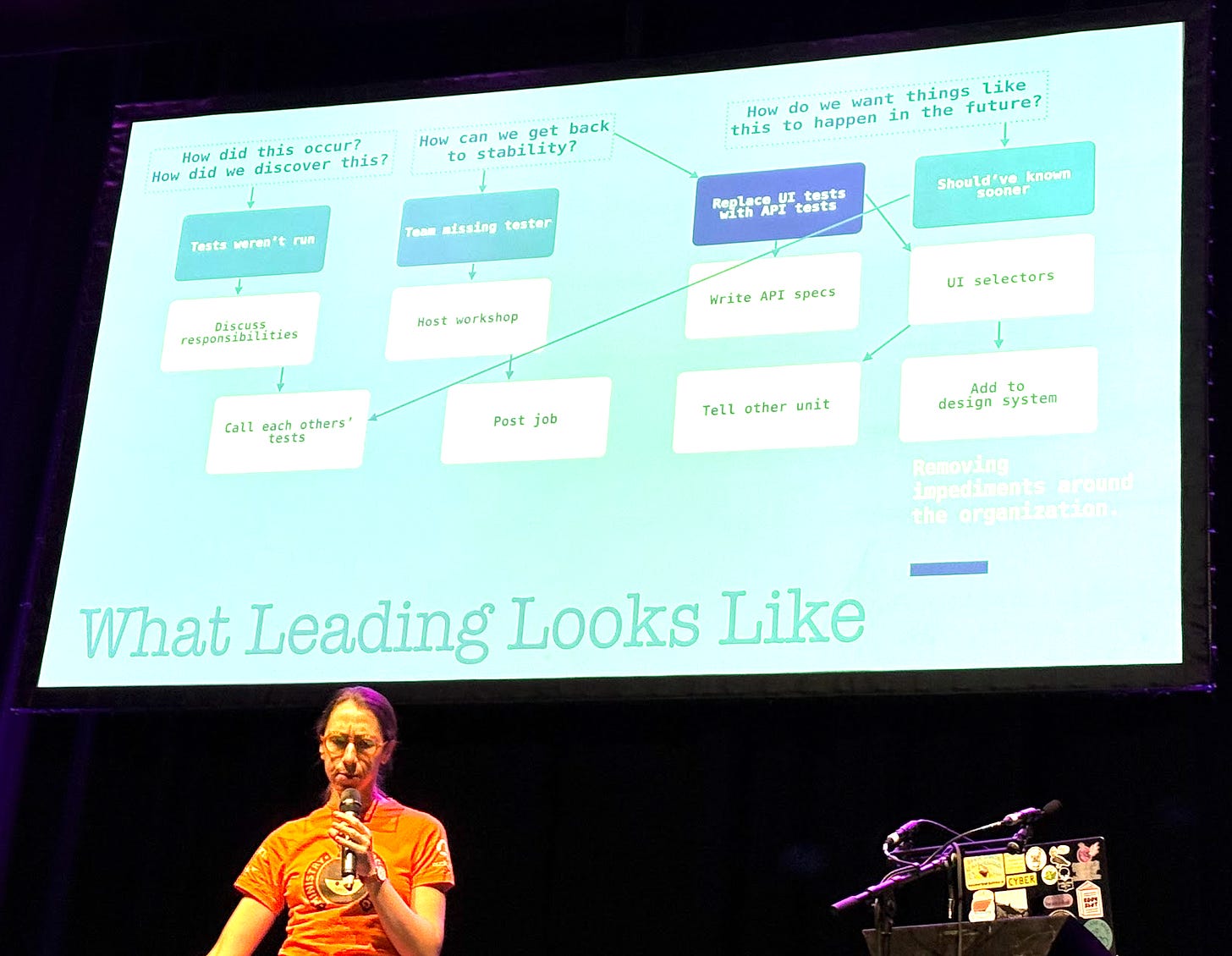

A Day in the Life of a Quality Lead by Elizabeth Zagroba

Elizabeth told the story of her journey from senior tester to quietly leading. What struck me most was her mindset of always trying to “work herself out of a job”. She’d clear away the tasks that used to keep her busy, only to discover new areas where she could add value.

It’s a powerful reminder that developing in your career doesn’t always mean moving into management. You can grow as an individual contributor by unblocking teams, enabling others, and making yourself less of a bottleneck. Far from making yourself redundant, you open up space to deliver greater value.

Elizabeth’s story showed how leadership is often about influence and systems thinking rather than titles.

Navigating a Career in Quality Engineering by Ben Dowen

Ben’s talk paired beautifully with Elizabeth’s. Where she spoke about moving up the stack, he focused on managing your career more deliberately.

He talked about the importance of feedback loops: not just receiving feedback, but capturing the impact of your work so you can show the value you bring. This wasn’t about chasing recognition, but about using evidence to shape the work you do, align it with what matters to you, and build a network that helps you do more of the work you enjoy.

I found it a really valuable perspective, especially for anyone wondering what’s next in their career. It’s not just about having a job; it’s about crafting a career path with intention.

💡 If you’re enjoying these reflections, I share posts like this regularly in my Quality Engineering newsletter. It’s a mix of conference takeaways, practical ideas, and deeper dives into how we can create and sustain quality in complex systems. Subscribe if you’d like to keep these conversations coming straight to your inbox.

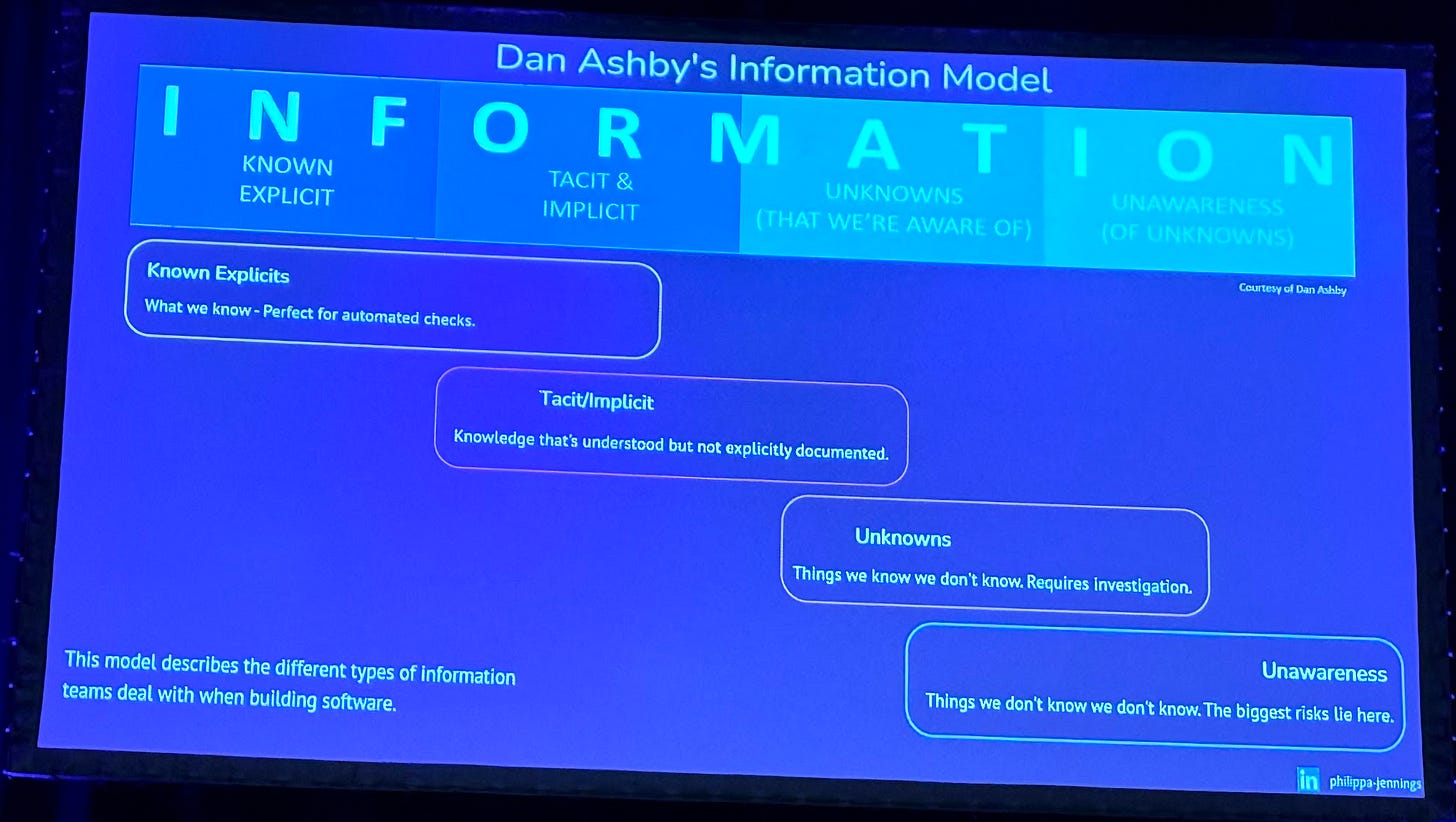

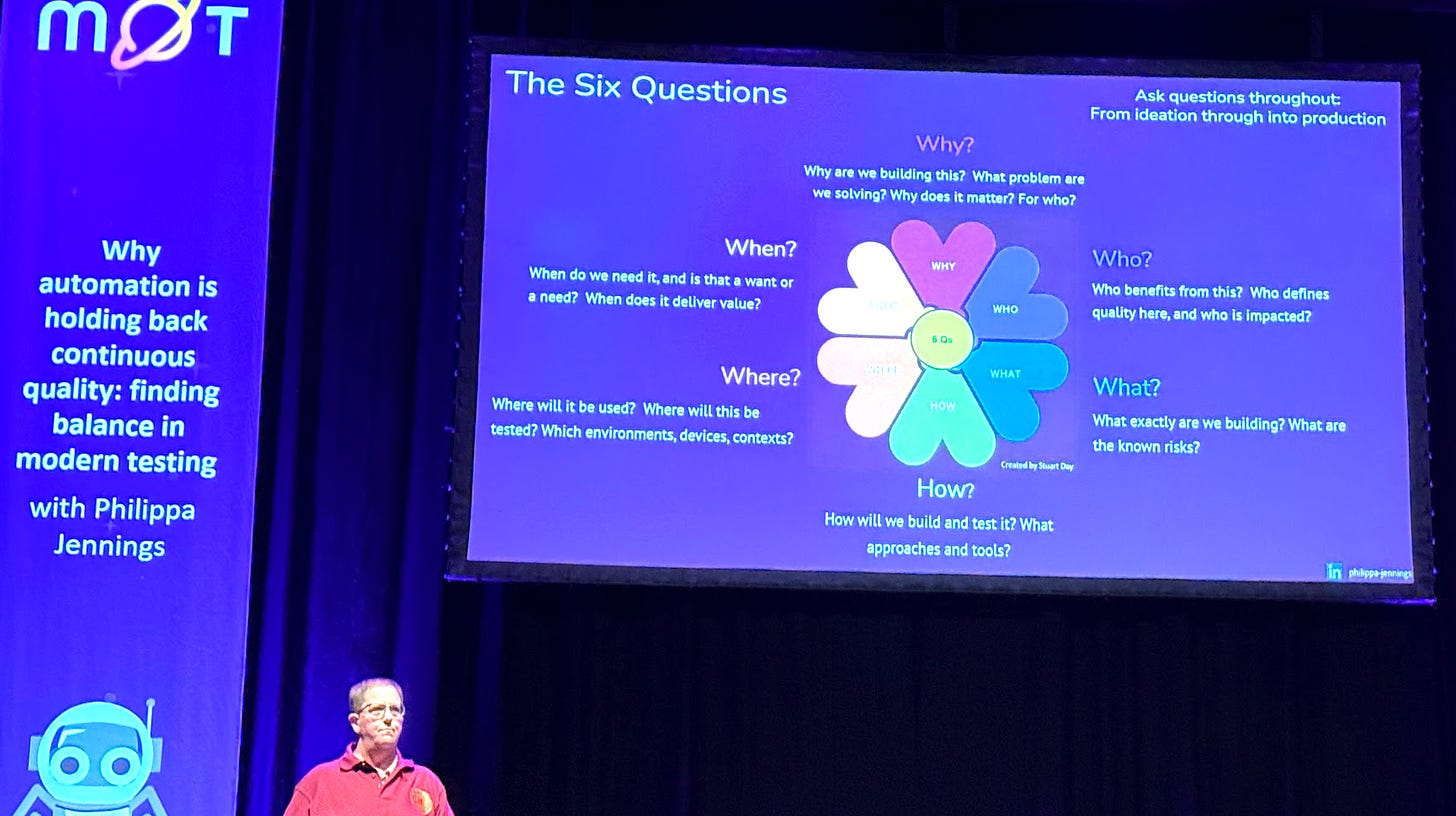

Why Automation Is Holding Back Continuous Quality by Philippa Jennings

Philippa tackled the tricky question of whether we should “automate everything”. Her view was that aiming for 100% automation can do more harm than good, because you can end up focusing more on the automation itself than on the problems you’re trying to solve.

Philippa drew on Dan Ashby’s information framework (which reminded me of my Testing Unknown Unknowns matrix) and Stuart Day’s six questions to show how we can think more clearly about what we know, what we don’t know, and what’s worth automating. By combining the two, she gave us a simple but powerful way to make implicit information explicit and then decide where automation really adds value.

It was a refreshing take that moves the conversation away from chasing numbers towards making smarter, context-driven choices.

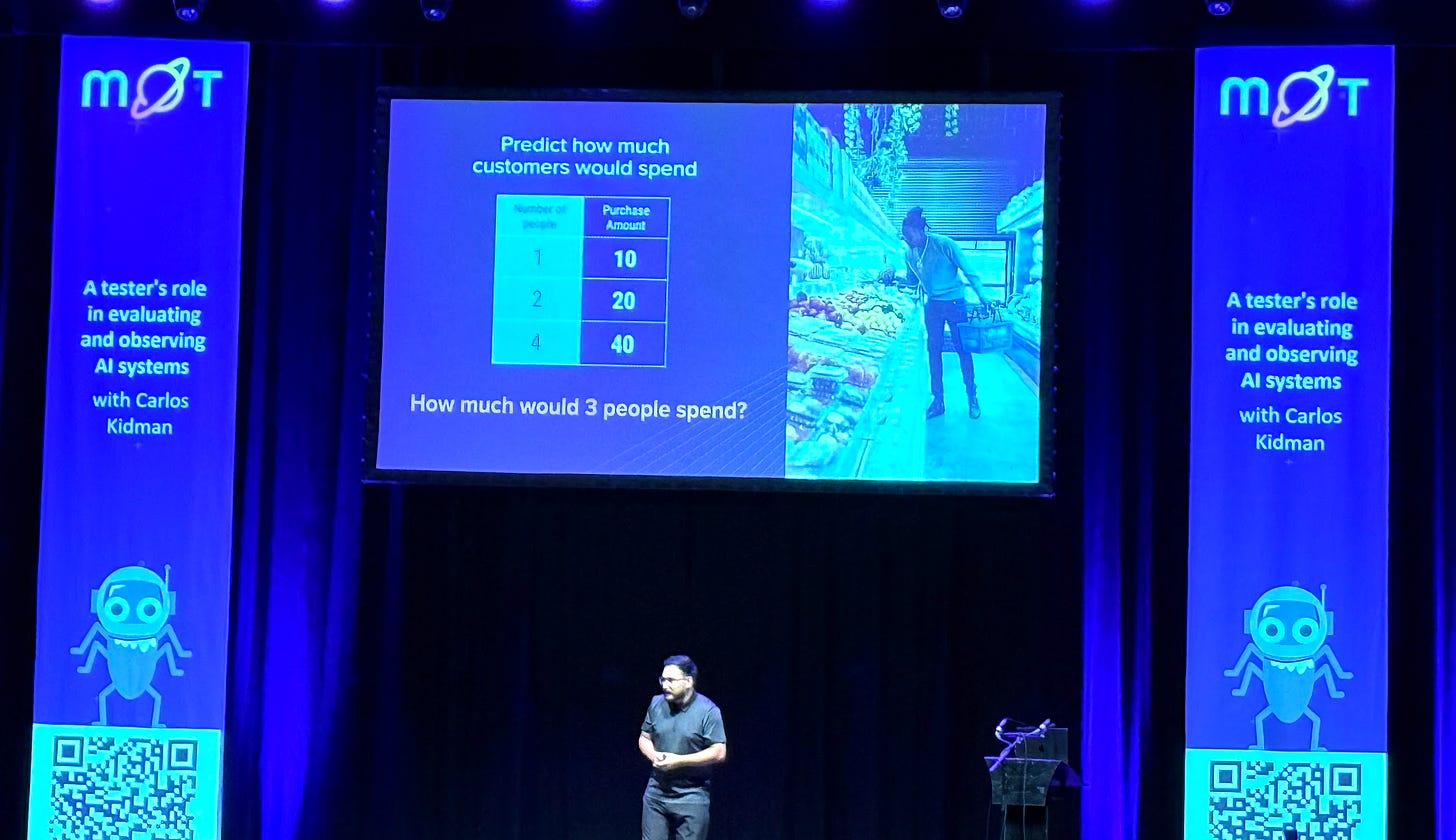

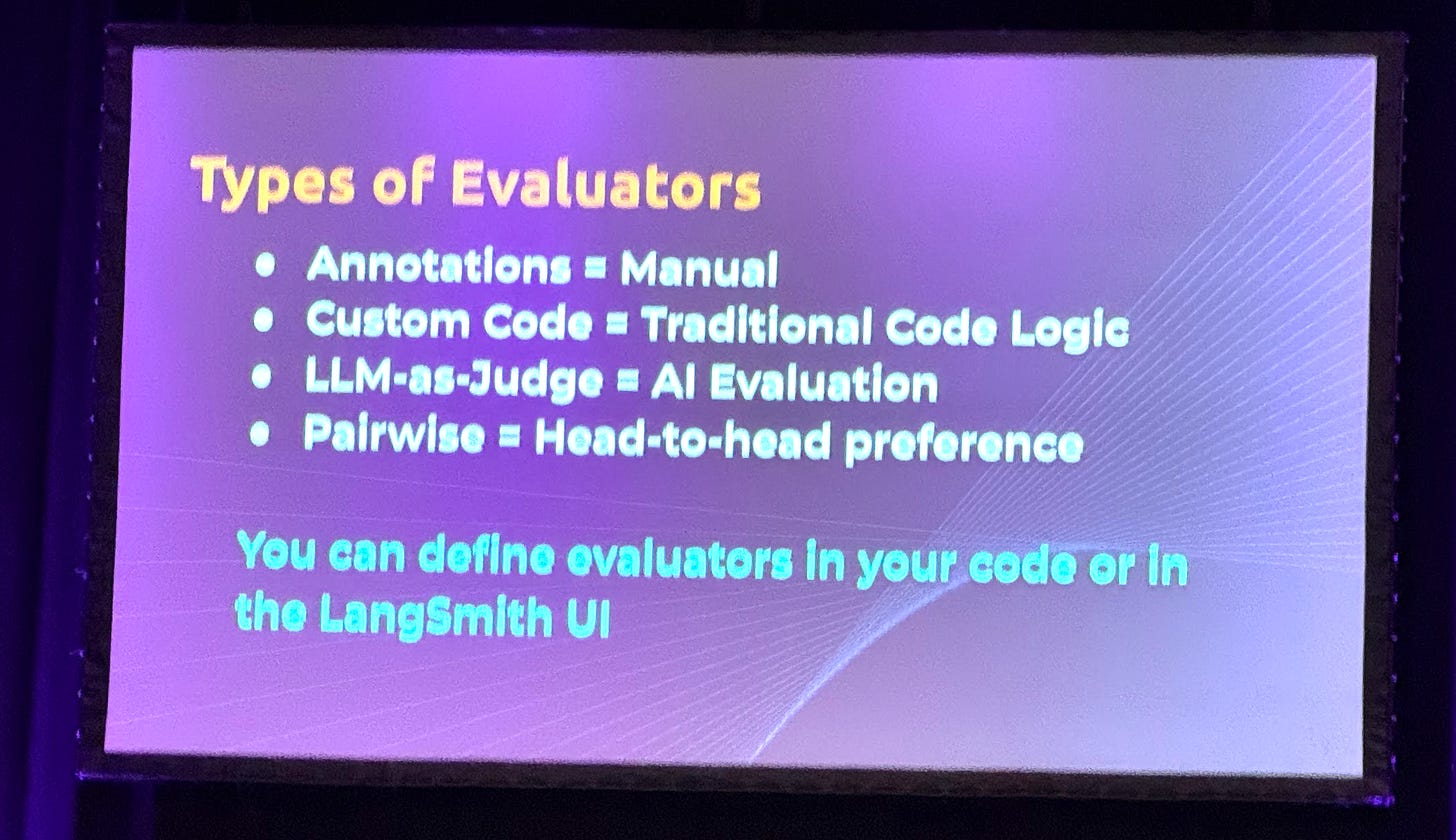

A Tester’s Role in Evaluating and Observing AI Systems by Carlos Kidman

AI is everywhere right now, and Carlos gave one of the most practical sessions I’ve seen on how testers can actually engage with it. He shared evaluation tools and methods that help us understand where uncertainty lies in AI systems and what quality attributes matter most.

What I loved was his point that you don’t need to be an AI expert to get involved. Testers and quality engineers bring something unique: the ability to ask the right questions, identify attributes worth measuring, and build systematic ways to assess whether each iteration of an AI model is improving or regressing.

This kind of thinking is going to be crucial as AI systems become more complex and more embedded in our products.

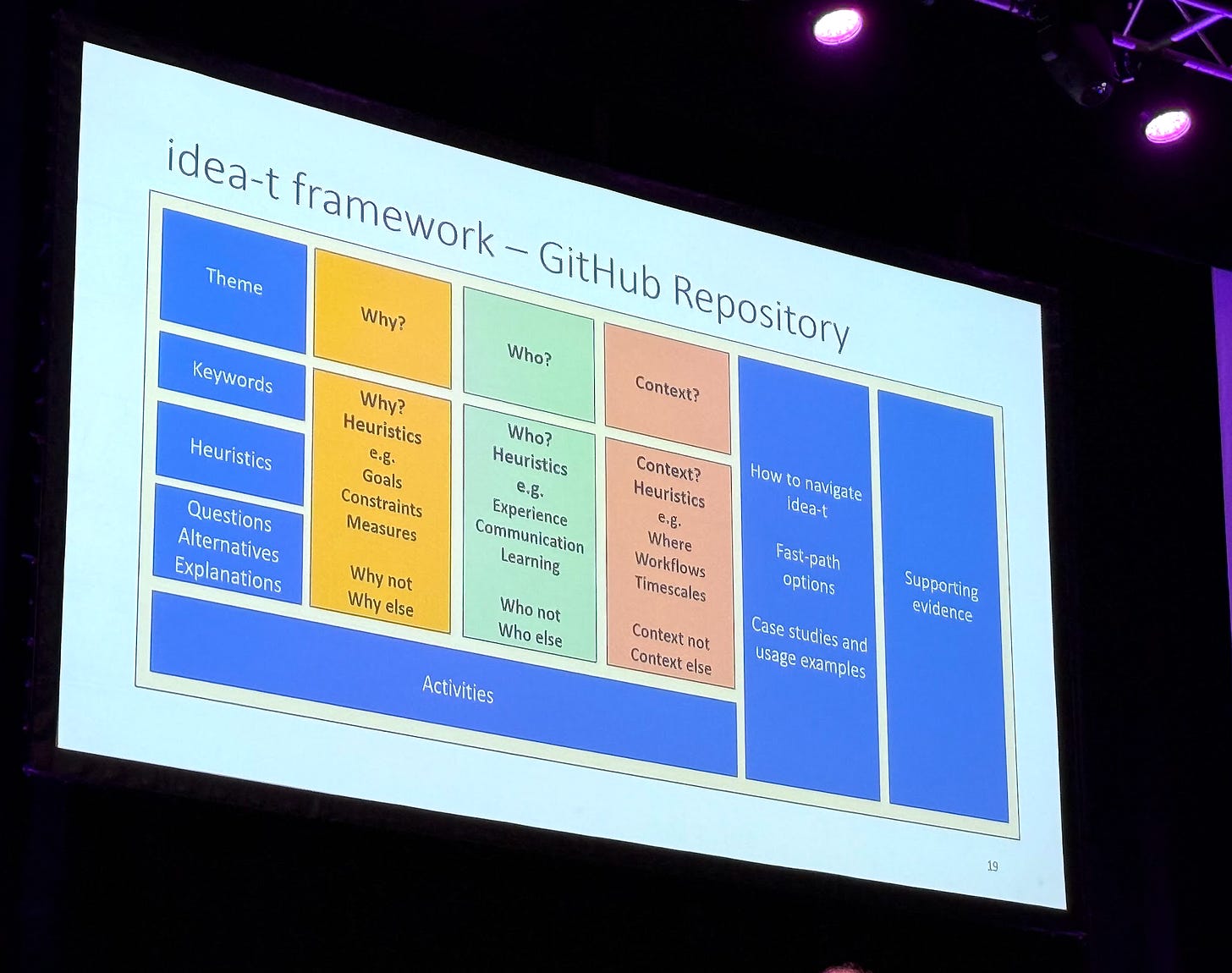

Will a New Tool Help? Here’s an IDEA-T to Think About by Isabel Evans

Isabel tackled a challenge we’ve all faced: deciding whether a shiny new tool is worth it. She introduced her IDEA-T framework, a set of heuristics to guide those decisions.

What stood out was how practical it felt. Instead of being swayed by flashy UIs or vendor promises, the framework encourages you to step back and ask: what do we really need? Should we build, buy, or adapt something? And how will we measure success?

It’s a framework I can see being useful not only for external tools but also when building internal ones. A really thoughtful contribution to an area that often gets overlooked.

How Quality Is Created, Maintained and Lost in Complex Software Systems (my talk)

I was really excited to contribute to the programme this year with my talk on the July 2024 CrowdStrike outage. It was one of the most impactful software incidents in recent memory, and I used it as a case study to explore how quality can be lost in complex systems.

I approached it from two angles. On the micro level, I looked at CrowdStrike’s immediate response and why traditional root cause analysis often fails in these situations. On the macro level, I explored how we can learn from incidents like this to design more resilient systems and mitigate widespread impact.

The key message: catastrophic failures in complex ecosystems may be inevitable, but we can reduce their severity by studying them carefully and integrating those lessons into our quality engineering practices.

Expect a full write-up very soon!

Delivering this talk at TestBash was a great experience. The questions and discussions that followed showed just how much appetite there is in our community for thinking about resilience and systems-level quality.

My Reflections

Looking back across these talks, a few threads tied them together for me:

Leadership without titles: Elizabeth, Ben, and Barry (who spoke brilliantly on leading change as an individual contributor) all highlighted how influence matters more than job titles.

AI and emergent systems: Carlos and Martin Hynie (whose session on “GenAI Wars” was fascinating) pushed us to think about how we’ll test, observe, and control systems whose behaviour we can’t fully predict.

Frameworks and heuristics: Isabel and Philippa Jennings (on automation) reminded us that good decision-making tools can help us avoid chasing trends and instead focus on high-value work.

Resilience and response: My talk and others underlined that the true test of quality isn’t just when things work, but how we respond when they don’t.

What became clear is that quality engineering isn’t just about testing earlier or automating more. It’s about helping teams reduce uncertainty, build quality in, and prepare for the unexpected. Our systems will always surprise us, especially as AI drives more emergent behaviour. Our job is to make sure we can understand, respond, and adapt when they do.

Closing Thoughts

This year’s TestBash felt like a turning point. The focus has shifted from testing as an isolated activity to quality as an organisational capability. That doesn’t mean testing isn’t important; it is. But it’s one piece of a much bigger puzzle.

I left Brighton energised, with new ideas to take back to my work and a renewed appreciation for this community. A huge thank you to the Ministry of Testing team for curating such a rich programme, and to the speakers and attendees for the conversations that made it so engaging.

If you were there, I’d love to hear your highlights. If not, what do you think about this shift from testing to quality? How are you seeing it play out in your work?