Ongoing Tradeoffs, and Incidents as Landmarks

One of the really valuable things you can get out of in-depth incident investigations is a better understanding of how work is actually done, as opposed to how we think work is done, or how it is specified. A solid approach for doing this is to get people back into what things felt like at the time, and interview them about their experience to learn what they were looking for and what was challenging. By taking a close look at how people deal with exceptional situations and how they translate goals into actions, you also get to learn a lot about what's really important in normal times.

Incidents disrupt. They do so in undeniable ways that more or less force organizations to look inwards and question themselves. The disruption is why they are good opportunities to study and change how we do things.

In daily work, we'll tend to frame things in terms of decisions: do I ship now or test more? Do I go at it slow to really learn how this works or do I try and get AI to slam through it and figure it out in more depth later? Do we cut scope or move the delivery date? Do I slow down my own work to speed up a peer who needs some help? Is this fast enough? Should I argue in favor of an optimization phase? Do I fix the flappy test from another team or rerun it and move on? Do I address the low-urgency alert that will create a major emergency later, or address the minor emergency already in front of me? As we look back into our incidents and construct explanations, we can shed more light on what goes on and what's important.

In this post, I want to argue in favor of an additional perspective, centered on considering incidents to be landmarks useful for orienting yourself in a tradeoff space.

From Decisions to Continuous Tradeoffs

Once you look past mechanical failures and seek to highlight the challenges of normal work, you start to seek ways to make situations clearer, not just to prevent undesirable outcomes, but to make it easier to reach good ones too.

Over time, you may think that decisions get better or worse, or that some types shift and drift as you study an ever-evolving set of incidents. There are trends, patterns. It will feel like a moving target, where some things that were always fine start being a problem. Sometimes it will seem that external pressures, outside of any employee's control, create challenges that seem to emerge from situations related to previous ones, which all make incidents increasingly feel like natural consequences of having to make choices.

Put another way, you can see incidents as collections of events in which decisions happen. Within that perspective, learning from them means hoping for participants to get better at dealing with the ambiguity and making future decisions better. But rather than being collections of events in which decisions happen, it's worthwhile to instead consider incidents as windows letting you look at continuous tradeoffs.

By continuous tradeoffs, I mean something similar to this bit of an article Dr. Laura Maguire and I co-authored titled Navigating Tradeoffs in Software Failures:

Tradeoffs During Incidents Are Continuations of Past Tradeoffs

Multiple answers hinted at the incident being an outcome of existing patterns within the organization where they had happened, where communication or information flow may be incomplete or limited. Specifically, the ability of specific higher-ranking contributors who can routinely cross-pollinate siloed organizations is called as useful for such situations [...]

[...]

The ways similar tradeoffs were handled outside of incidents are revisited during the incidents. Ongoing events provide new information that wasn’t available before, and the informational boundaries that were in place before the outage became temporarily suspended to repair shared context.

A key point in this quote is that what happens before, during, and after an incident can all be projected as being part of the same problem space, but with varying amounts of information and uncertainty weighing on the organization. There are also goals, values, priorities, and all sorts of needs and limitations being balanced against each other.

When you set up your organization to ship software and run it, you do it in response to and in anticipation of these pressure gradients. You don’t want to move slow with full consensus on all decisions. You don’t want everyone to need to know what everybody else is doing. Maybe your system is big enough you couldn’t anyway. You adopt an organizational structure, processes, and select what information gets transmitted and how across the organization so people get what they need to do what is required. You give some people more control of the roadmap than others, you are willing to pay for some tools and not others, you will slow down for some fixes but live with other imperfections, you will hire or promote for some teams before others, you will set deadlines and push for some practices and discourage others, because as an organization, you think this makes you more effective and competitive.

When there’s a big incident happening and you find out you need half a dozen teams to fix things, what you see is a sudden shift in priorities. Normal work is suspended. Normal organizational structure is suspended. Normal communication patterns are suspended. Break glass situations mean you dust off irregular processes and expedite things you wouldn’t otherwise, on schedules you wouldn’t usually agree to.

From the perspective of decisions, it's possible the bad system behavior gets attributed to suboptimal choices, and we expect to know better in the future through what we learn now that we've shaken up our structure for the incident. In the aftermath, people keep suspending regular work to investigate what happened, share lessons, and mess with the roadmap with action items outside of the regular process. Then you more or less go back to normal, but with new knowledge and follow-up items.

Acting on decisions creates a sort of focus on how people handle the situations. Looking at incidents like they're part of a continuous tradeoff space lets you focus on how context gives rise to the situations.

In this framing, the various goals, values, priorities, and pressures are constantly being communicated and balanced against each other, and create an environment that shapes what solutions and approaches we think are worth pursuing or ignoring. Incidents are new information. The need to temporarily re-structure the organization is a clue that your "steady state" (even if this term doesn't really apply) isn't perfect.

Likewise, in a perspective of continuous tradeoffs, it's also possible, and now easier, to see the "bad" system behavior as a normal outcome of how we've structured our organization.

The type of prioritizations, configurations, and strategic moves you make mean that some types of incidents are more likely than others. Choosing to build a multi-tenant system saves money from shared resources but reduces isolation between workload types, such that one customer can disrupt others. Going multi-cloud prevents some outages but comes with a tax in terms of having to develop or integrate services that you could just build around a single provider. Keeping your infrastructure team split from your product org and never talking to sales means they may not know about major shifts in workloads that might come soon (like a big marketing campaign, a planned influx of new heavy users, or new features that are more expensive to run) and will stress their reactive capacity and make work more interrupt-driven.

Reacting to incidents by patching things up and moving on might bring us back to business as usual, but it does not necessarily question whether we're on the right trajectory.

Incidents as Navigational Landmarks

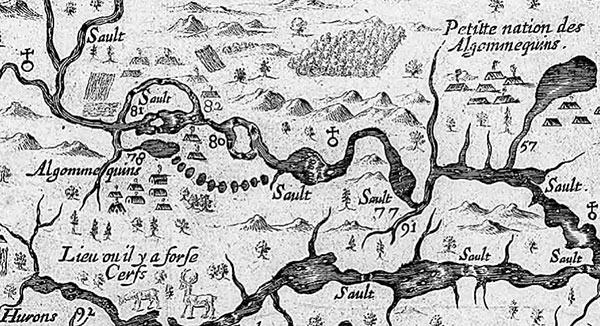

Think of old explorer maps, or even treasure maps: they are likely inaccurate, full of unspecified areas, and focused mainly on features that would let someone else figure out how to get around. The key markers on them would be forks in some roads or waterways, and landmarks.

If you were to find yourself navigating with a map like this, you'd know you were heading in the right direction by confirming your position against landmarks or elements matching your itinerary. You'd know you're not on the right path at all by noticing features that aren't where you expect them, or aren't there at all: you may have missed a turn if you suddenly encounter a ravine that wasn't on your planned path, or that you weren't supposed to reach until you had first seen a river.

The analogy I want to introduce is to think of the largely unpredictable solution space of tradeoffs as the poorly mapped territory, and of incidents as potential landmarks when finding your way. They let you know if you're going in a desired general direction, but also if you're entirely in the wrong spot compared to where you wanted to be. You always keep looking for them; on top of being point-in-time feedback mechanisms when they surprise you, they're also precious ongoing signals in an imprecise world.

Making tradeoffs implies that there are types of incidents you expect to see happening, and some you don't.

If you decide to ship prototypes earlier to validate their market fit, before having fully analyzed usage patterns and prepared scaling work, then getting complaints from your biggest customers trying them and causing slowdowns is actually in line with your priorities. That should be a plausible outcome. If you decide to have a team ignore your usual design process (say, RFCs or ADRs that make sure it integrates with the rest of the system well) in order to ship faster, then you should be ready for issues arising from clashes there. If you emphasize following procedures and runbooks, you might expect documented cases to be easier to handle but the truly surprising ones to be relatively more challenging and disruptive since you did not train as much for coping with unknown situations.

All these elements might come to a head when a multi-tenant system gets heavy usage from a large customer trying out a new feature developed in isolation (and without runbooks), which then impacts other parts of the system, devolving into a broader outage while your team struggles to figure out how to respond. This juncture could be considered a perfect storm as much as it could be framed as a powder keg; which one we get is often decided based on the amount of information available (and acted on) at the time, with some significant influence from hindsight.

You can't be everywhere all at once in the tradeoff space, and you can't prevent all types of incidents all at once. Robustness in some places creates weaknesses in others. Adaptation lets you reconfigure as you go, but fostering that capacity to adapt requires anticipation and the means to do so.

Either the incidents and their internal dynamics are a confirmation of the path you've chosen and it's acceptable (even if regrettable), or it's a path you don't want to be on and you need to keep that in mind going forward.

Using incidents as landmarks is one of the tools that let you notice and evaluate whether you need to change priorities, or put your thumb on the scale another way. You can suspect that the position you’re in was an outcome of these priorities. You might want to correct not just your current position, but your overall navigational strategy. Note that an absence of incidents doesn't mean you’re doing well, just that there are no visible landmarks for now; if you still seek a landmark, near misses and other indirect signs might help.

But to know how to orient yourself, you need more than local and narrow perspectives on what happened.

If your post-incident processes purely focus on technical elements and response, then they may structurally locate responsibility on technical elements and responders. The incidents as landmarks stance demands that your people setting strategy do not consider themselves to be outside of the incident space, but instead see themselves as indirect but relevant participants. We're not looking to shift accountability away, but to broaden our definition of what the system is.

You want to give them the opportunity to continually keep the pressure gradients behind goal conflicts, and their related adaptations, in scope for incident reviews.

One thing to be careful about here is that to find the landmarks and make them visible, you need to go beyond the surface of the incident. The best structures to look for are going to be stable; forests are better than trees, but geological features are even better.

What you'll want to do is keep looking for second stories, elements that do not simply explain a specific failure, but also influence every day successes. They're elements that incidents give you opportunities to investigate, but that are in play all the time. They shape the work by their own existence, and they become the terrain that can both constrain and improve how your people make things happen.

When identifying contributing factors, it's often factors present whether things are going well or not that can be useful in letting you navigate tradeoff spaces.

What does orientation look like? Once you have identified some of these factors that have systemic impact, then you should expect the related intervention (if any is required because you think the tradeoff should not be the same going forward) to also be at a system level.

Are you going to find ways to influence habits, tweak system feedback mechanisms, clarify goal conflicts, shift pressures or change capacity? Then maybe the landmarks are used for reorienting your org. But if the interventions get re-localized down to the same responders or as new pressures added on top of old ones (making things more complex to handle, rather than clarifying them), chances are you are letting landmarks pass you by.

The Risks of Pushing for This Approach

The idea of using incidents as navigational landmarks can make sense if you like framing the organization as its own organism, a form of distributed cognition that makes its way through its ecosystem with varying amounts of self-awareness. There's a large distance between that abstract concept, and you, as an individual, running an investigation and writing a report, where even taking the time to investigate is subject to the same pressures and constraints as the rest of normal work.

As Richard Cook pointed out, the concept of human error can be considered useful for organizations looking to shield themselves from the liabilities of an incident: if someone can be blamed for events, then the organization does not need to change what it normally does. By finding a culprit, blame and human error act like a lightning rod that safely diverts consequences from the org’s structure itself.

In organizations where this happens, trying to openly question broad priorities and goal conflicts can mark you as a threat to these defence mechanisms. Post-incident processes are places where power dynamics are often in play and articulate themselves.

If you are to use incidents as landmarks, do it the way you would for any other incident investigation: frame all participants (including upper management) as people trying to do a good job in a challenging world, maintain blame awareness, try to find how the choices made sense at the time, let people tell their stories, seek to learn before fixing, and don’t overload people with theory.

Maintaining the trust the people in your organization give you is your main priority in the long term, and sometimes, letting go of some learnings today to protect your ability to keep doing more later is the best decision to make.

Beyond personal risk, being able to establish incidents as landmarks and use them to steer an organization means that your findings become part of how priorities and goals are set. People may have vested interests in you not changing things that currently advantage them, or may try to co-opt your process and push for their own agendas. The incidents chosen for investigations and the type of observations allowed or emphasized by the organization will be of interest. Your work is also part of the landscape.