Mapping Feedback Across Uncertainty

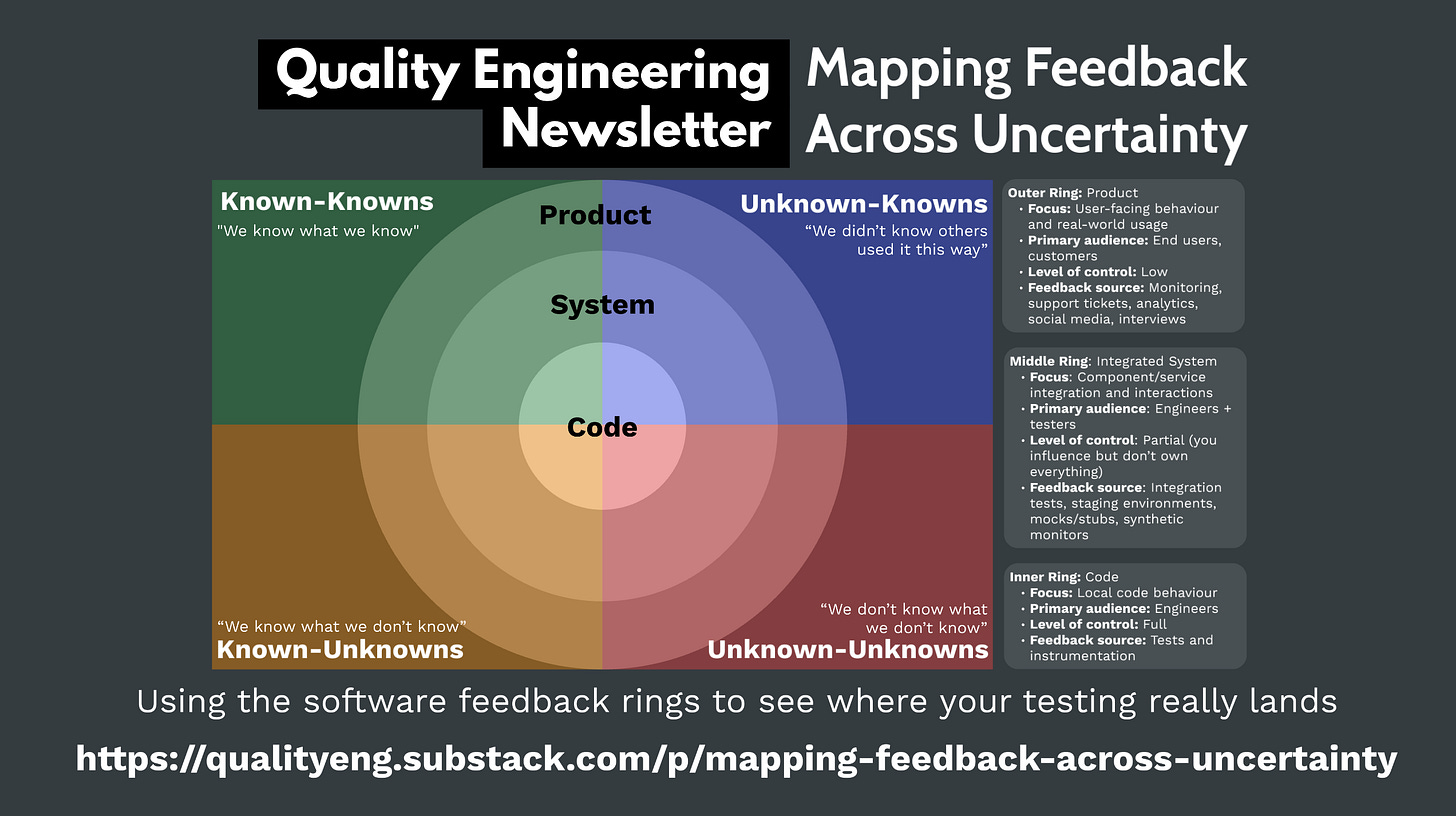

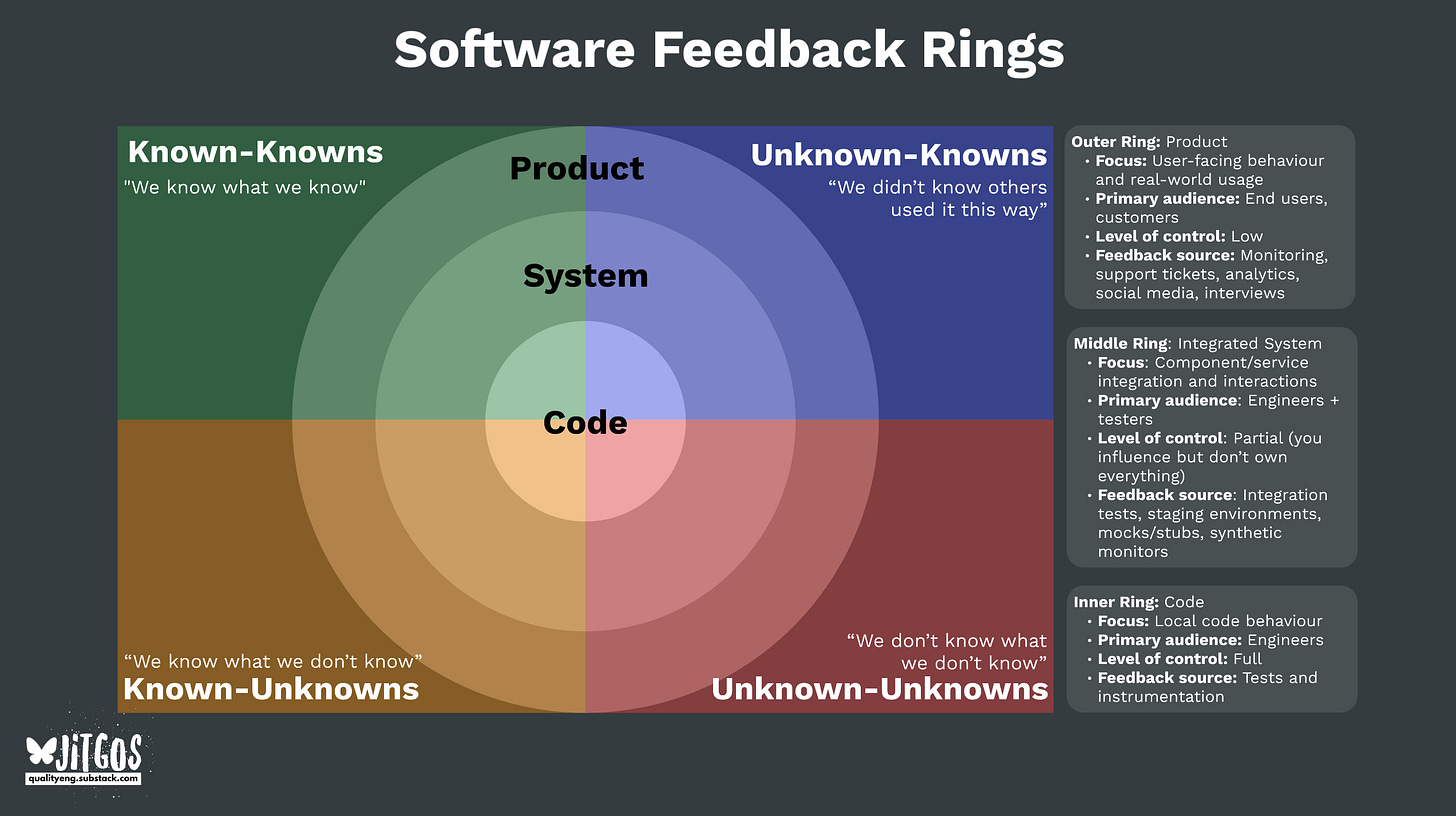

Testing isn’t just about catching bugs; it’s about lowering uncertainty by providing information through feedback. The challenge is that not all feedback is equal. Some of it tells you what you already knew, some exposes risks, and some uncovers things you’d never have expected. This is where the Software Feedback Rings come in.

I recently shared a new framework called The Software Feedback Rings, which was heavily influenced by Nichola Sedgwick’s Quality Radar. Check out that post to learn more. The gist is that it helps teams think about how they are lowering their uncertainty across three rings (code, system and product) by viewing each ring through the Testing Unknown Unknowns quadrants.

In this post, I'd like to dive into which techniques help lower uncertainty in each of these quadrants and how that helps the team.

The Known-knowns quadrant

"We know what we know"

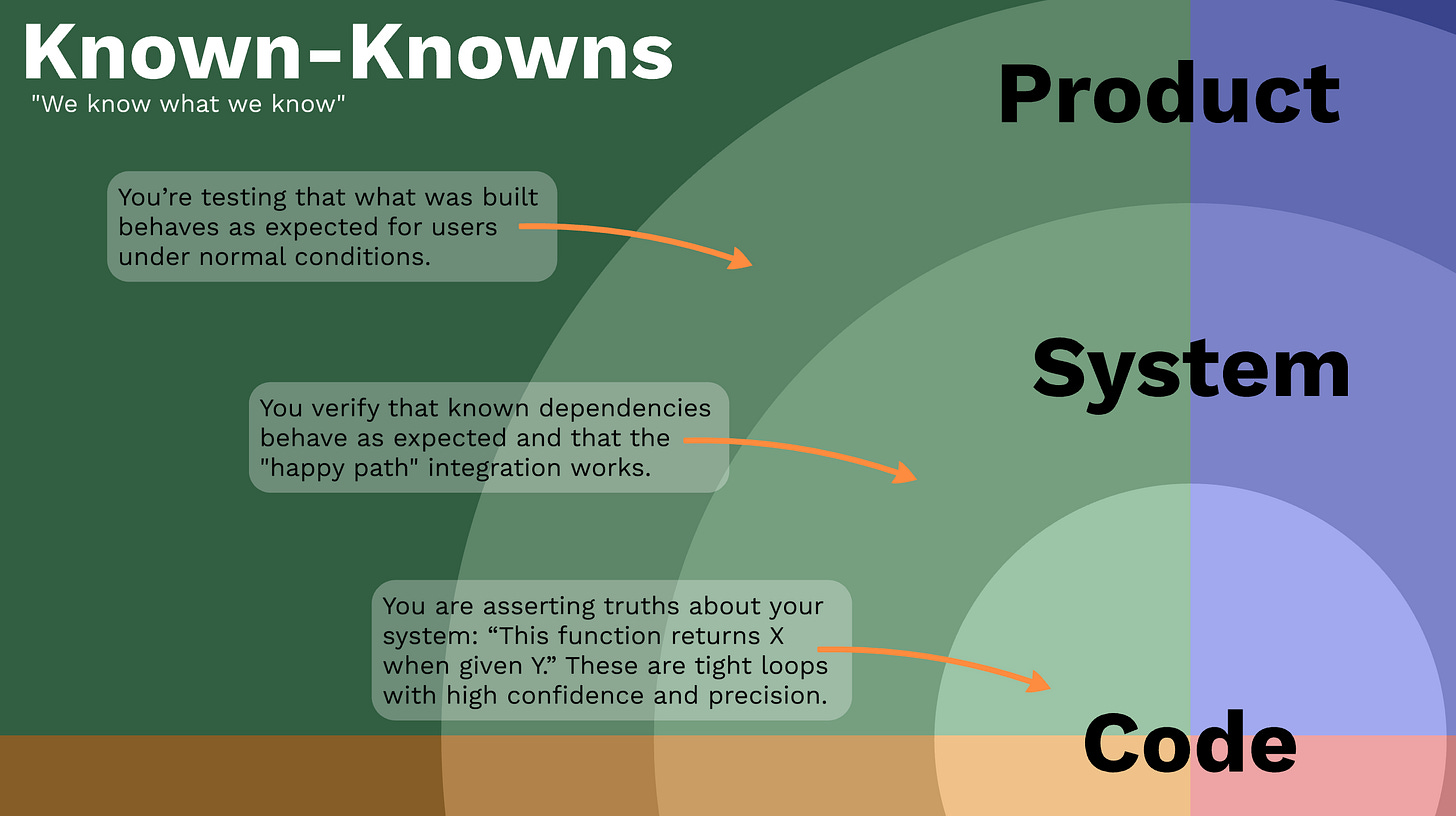

This quadrant is about confirming what we already understand about our system. It increases our certainty about its behaviour.

It’s probably the most tested quadrant in engineering teams, and it’s where scripted forms of testing (automated or manual) live. These give strong feedback but usually stick to the happy path, so they are less effective at spotting deviations from it.

For a deeper dive, see Known-knowns from the Testing Unknown Unknowns post.

Inner ring: Code

Here you are asserting truths about your code: “This function returns X when given Y.” This is tight-loop feedback with high confidence and precision.

The feedback here confirms behaviour that engineers already expect: they know what the code should do and use these techniques to gain certainty that it does.

Example techniques:

Unit tests, contract tests, TDD-confirming specs

Linting, static analysis, type checks

CI pipeline failures on code-level rules
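To make the “returns X when given Y” idea concrete, here is a minimal sketch of a code-ring check. The `apply_discount` function and its rounding rule are hypothetical, made up purely for illustration; the point is only that each test asserts a behaviour the engineer already knows to be true.

```python
def apply_discount(price_cents: int, percent: int) -> int:
    """Return the discounted price in cents, rounding down (hypothetical rule)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


def test_apply_discount_returns_expected_price() -> None:
    # Known-known: given Y (1000 cents at 25% off), the function returns X (750 cents).
    assert apply_discount(1000, 25) == 750


def test_apply_discount_rejects_invalid_percent() -> None:
    # The rejection rule is also known behaviour, so it is asserted directly.
    try:
        apply_discount(1000, 101)
    except ValueError:
        return
    raise AssertionError("expected ValueError for percent > 100")
```

The type hints do similar work before any test runs: a type checker can flag a call such as `apply_discount("1000", 25)` without executing the code.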

Middle ring: System

You're still at the code level here, but you've widened the scope to include the third-party modules and frameworks you use. You're verifying that known dependencies behave as expected and that the "happy path" integration works.

Example techniques:

Non-UI end-to-end tests verifying expected workflows

Alerting on known failure points (e.g. retry limits, 5xx rates)

Contract testing with known APIs
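As a rough illustration of alerting on a known failure point, the sketch below computes a 5xx rate from a window of response status codes and compares it with a threshold. The function names and the 5% default threshold are assumptions for the example, not values taken from any particular monitoring tool.

```python
from typing import Iterable


def five_xx_rate(status_codes: Iterable[int]) -> float:
    """Fraction of responses in the window that were server errors (500-599)."""
    codes = list(status_codes)
    if not codes:
        return 0.0  # no traffic in the window, so nothing to alert on
    errors = sum(1 for code in codes if 500 <= code <= 599)
    return errors / len(codes)


def should_alert(status_codes: Iterable[int], threshold: float = 0.05) -> bool:
    """Known failure point: alert when the 5xx rate exceeds the agreed threshold."""
    # The 0.05 default is an assumed value for illustration only.
    return five_xx_rate(status_codes) > threshold
```

For example, a window of 90 `200` responses and 10 `503` responses gives a rate of 0.10, which is above the assumed threshold and would raise the alert. The team already knows this failure mode exists, which is what keeps it in the Known-knowns quadrant.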

Outer ring: Product

This is how your end users experience your product. Here you’re testing that what was built behaves as expected for them under normal conditions. Feedback techniques here can also include live monitoring of the system.

Example techniques:

Automated UI tests verifying intended features

SLO alerts for uptime, latency, error rates

Synthetic testing for uptime
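An SLO alert can be sketched in a few lines, assuming you already count successful and total requests over some window. The function names and the 99.9% availability target below are hypothetical; a real setup would normally express this in the monitoring platform's own rule language.

```python
def availability(good_requests: int, total_requests: int) -> float:
    """Share of requests in the window that succeeded."""
    if total_requests == 0:
        return 1.0  # no traffic: treat the window as fully available
    return good_requests / total_requests


def slo_breached(
    good_requests: int, total_requests: int, target: float = 0.999
) -> bool:
    """True when measured availability falls below the agreed target."""
    # The 0.999 default is an assumed target for illustration only.
    return availability(good_requests, total_requests) < target
```

With 9,995 good requests out of 10,000, availability is 0.9995 and the assumed target is met. With 9,980 out of 10,000 it drops to 0.998 and the alert would fire.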

The Known-unknowns quadrant

"We know what we don't know"