How to implement self-healing tests with AI

Part 1: the foundations of self-healing

Self-healing has long been one of the toughest challenges in software test automation. Automated tests are meant to catch real issues, but they often break when an application changes unexpectedly. Over time, these failures pile up, shifting teams from building new tests to endlessly patching old ones.

This is the paradox of test automation: it promises stability, yet it demands constant repair.

In theory, self-healing can ease this burden by allowing tests to adapt automatically to application changes. In practice, though, self-healing has been difficult to achieve. Traditional self-healing approaches rely on rigid, rule-based logic that struggles to keep pace with evolving applications and often requires intrusive adjustments to the codebase itself.

But recent advances in artificial intelligence are changing that. Large language models (LLMs), such as the ones powering ChatGPT and Gemini, offer a way to reason about context and intent, making adaptive, context-aware self-healing a realistic goal.

This series will explore what self-healing is, why it matters, and how LLMs can enable it. We’ll also work through a complete, working example of implementing self-healing from scratch. In this article (part 1 of the series) we’ll break down the challenges of self-healing and discuss the fundamental building blocks of an AI-assisted self-healing system.

What exactly is self-healing?

One of the most frustrating parts of test automation is when a test fails for the wrong reason. An updated feature may be working perfectly, but the test breaks because something minor changed, such as a label, an ID, or the shape of a response.

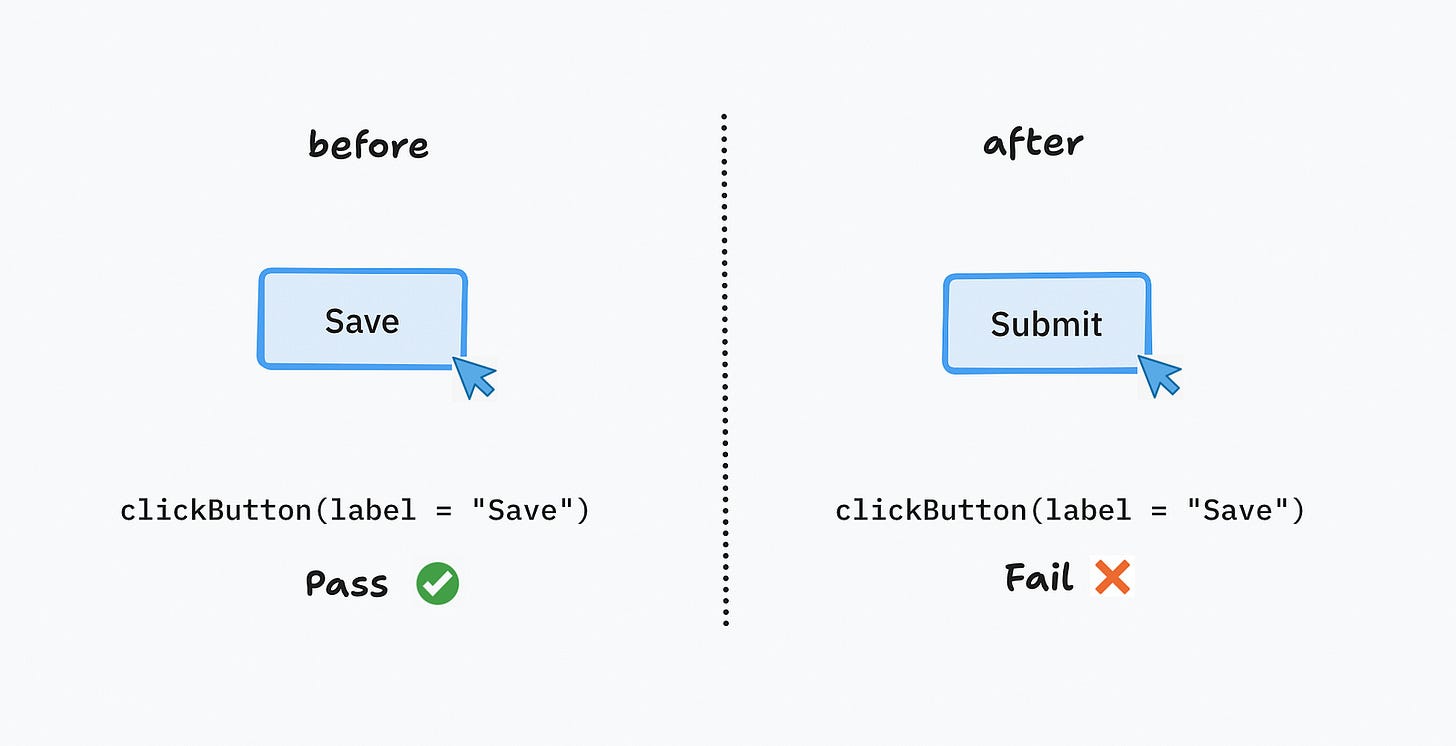

Consider a simple case. Suppose you have a test that expects a button labelled Save. A developer renames it to Submit, but everything else still works exactly the same. This causes the test to fail, not because the feature is broken, but because it was written to look for the old label.

This brittleness is everywhere. Automated tests are often tightly coupled to specific implementation details, so harmless changes like this can trigger failures. The Save→Submit example shows a UI change, but the same problem appears at every layer: a renamed input in unit tests, a restructured API response in integration tests, or an altered DOM layout in end-to-end tests.

Self-healing, or auto-healing, is the idea of breaking this cycle. A self-healing system should detect a harmless change and adjust the test automatically. In the Save→Submit case, it would recognise the new label, update the selector, and continue running without human intervention. Tests stay in sync with the application, and the burden of maintenance is reduced.

That may sound simple, but in practice, it isn’t. Let’s look at why.

Why is self-healing tough?

Self-healing is challenging because not every test failure means the same thing. Some failures signal a genuine defect in the application, while others reflect a test that’s simply out of date. To tell the difference, you need to understand what the test is verifying, how it’s written, and whether the application’s new behaviour still aligns with that intent. In short: you need context.

To understand why context matters, let’s look at the kinds of changes that typically break tests:

- Semantic changes: updates to naming or labels of objects, like renaming a button from Save to Submit.

- Structural changes: shifts in layout or placement, like moving a button to another part of a form.

- Behavioural changes: modifications to the logic or flow, like altering when a button can be clicked or what action it performs.

Any one of these changes, or a combination of them, can cause a cascade of test failures even when the application still works as expected. Writing rules to handle all these cases in a clean and flexible way is difficult. Without the contextual understanding that LLMs bring, the result is often rigid with overly complex logic. In some cases, the application ends up being adapted to fit the tests, rather than the tests adapting to the application.

This is where LLMs can help. By interpreting context and meaning more flexibly, they offer a more adaptable way to keep tests aligned with the application as it evolves. With this in mind, let’s quickly examine the advantages and limitations of using LLMs for self-healing.

Self healing with LLMs

Large language models, such as the GPT family, make self-healing easier by reasoning about the intent and context of a test, rather than relying on complex hardcoded rules. At their core, LLMs are trained on vast amounts of text and code, which gives them the ability to “fill in the blanks”. They can infer meaning from partial information and suggest likely continuations. In practice, that means they can often detect what a test is trying to achieve and propose a fix when it breaks.

But LLMs bring their own challenges. The first is hallucination. A model may suggest selectors, attributes, or code changes that don’t exist. Worse, it has no real way of knowing it’s wrong. Left unchecked, these “fixes” can create tests that pass for the wrong reasons or fail in new ways — the very problem we’re trying to solve.

The second challenge is context management. With too little context, the model doesn’t have enough information to make a good decision. With too much, the output becomes unreliable, slow, or costly. Giving the model just enough detail to be useful without overwhelming it, is a key design consideration when building a reliable self-healing system.

We can mitigate these challenges by adding guardrails in a well-considered self-healing system. By grounding model suggestions in real application data and carefully managing context, we can take advantage of what LLMs do well while reducing the risk of hallucinations or noisy outputs. But, the real question is how to bring these ideas together into a system that actually works. That’s what we’ll look at next.

Designing a good self-healing system

A reliable LLM-powered self-healing system rests on three core stages: Context Composition, LLM Evaluation, and Validation. Together these stages form a feedback loop that diagnoses the cause of a test failure, proposes a fix, and validates the fix before accepting it. This loop is designed to address the two main challenges of working with LLMs: managing context effectively and reducing the risk of hallucinations.

The diagram below shows a simplified “happy path” workflow. It illustrates how a harmless application change, such as a renamed button label, is detected and corrected with the help of an LLM. While real-world self-healing systems will require more logical layers (such as handling a true positive or handling incorrect fixes), this example captures the foundational building blocks you‘ll need before developing for more complex cases.

What’s striking about this three-stage loop is how general it is. The pattern isn’t limited to self-healing tests; it offers a practical way to bring more reliability to AI systems. LLMs remain probabilistic, but the loop of context, evaluation, and validation forces their outputs into a process we can check and control.

Practice example

To see how the flow works in practice, let’s use a simple example with TodoMVC and Playwright. You don’t need to be deeply familiar with Playwright to follow along.

Here’s a test that tries to add a new todo item by filling a input placeholder called What needs to be done:

// tests/todo.spec.ts

import { test, expect } from '@playwright/test';

test('adds a new todo item', async ({ page }) => {

await page.goto('https://todomvc.com/examples/react/dist/');

await page.getByPlaceholder('What needs to be done?').fill('Buy milk');

await page.getByPlaceholder('What needs to be done?').press('Enter');

await expect(page.getByText('Buy milk')).toBeVisible();

});Now imagine the placeholder changes to Add new task. The functionality is the same, but the test fails because it was written for the old label. This is where our three-stage loop comes in: Context Composition → LLM Evaluation → Validation.

1. Context Composition

The process begins when a test fails in a continuous integration (CI) pipeline. In this case, Playwright throws an error like:

Error: Strict mode violation: locator("placeholder=What needs to be done?") resolved to 0 elements.That failure triggers the self-healing loop. The pipeline captures relevant details about the failure:

{

"testName": "adds a new todo item",

"failingStep": "page.getByPlaceholder('What needs to be done?')",

"errorMessage": "Strict mode violation: locator('placeholder=What needs to be done?') resolved to 0 elements",

"errorLine": 3,

"domSnapshot": " ...",

"testFile": "// tests/todo.spec.ts ..."

}Good context filters out noise so the LLM focuses only on high-value information. This directly addresses the challenge of managing context effectively.

2. LLM Evaluation

Next, the system sends this context to an LLM such as GPT-4. The request has two parts:

- A system prompt with instructions, directing an AI model how to behave:

{

"role": "system",

"content": "You are an automated test repair assistant for Playwright (TypeScript). Only adjust selectors - no business logic changes."

}- A user prompt with the failure context:

{

"role": "user",

"content": {

"context": {

"testName": "adds a new todo item",

"failingStep": "page.getByPlaceholder('What needs to be done?')",

"errorMessage": "Strict mode violation: locator('placeholder=What needs to be done?') resolved to 0 elements",

"domSnapshot": " ...",

"testFile": "// tests/todo.spec.ts ..."

},

"task": "Propose minimal edits to fix the selector while preserving test intent. Respond as JSON with `rationale` and `changes`."

}

}The LLM analyses the context and proposes a targeted fix:

{

"analysis": "The test expects the placeholder 'What needs to be done?', but the DOM shows 'Add a new task'.",

"fix": {

"file": "tests/todo.spec.ts",

"line": 50,

"column": 7,

"oldCode": "await page.getByPlaceholder('What needs to be done?').fill('Buy milk');",

"newCode": "await page.getByPlaceholder('Add a new task').fill('Buy milk');",

"reason": "The selector needs to be updated to reflect the new placeholder."

}

}3. Validation

Finally, the proposed fix is validated in the CI pipeline:

- Apply the changes on a temporary branch.

- Re-run the failing test.

- Confirm that it now passes.

If validation succeeds, the system raises a pull request for human review:

{

"title": "Self-healing update - fix placeholder selector in todo.spec.ts",

"body": "Updated placeholder from 'What needs to be done?' to 'Add a new task' based on DOM snapshot analysis. Validated in CI.",

"branch": "self-heal/update-placeholder",

"base": "main"

}This workflow shows how the self-healing loop takes a brittle test, diagnoses the cause, proposes a fix, and ensures the change is safe before it is accepted.

Wrapping up

Thanks for following along. If some of these ideas feel new, they’ll quickly become familiar as you spend more time with AI-driven tools.

In this first part, we looked at what self-healing is, why it has been so hard to achieve, and how LLMs can change the equation. We sketched out a three-stage design that lays the groundwork for moving into implementation: Context Composition, LLM Evaluation, and Validation.

Part 2 will be more hands-on: a working repo with Playwright tests and a GitHub Actions pipeline you can clone and run. The ideas here will start to take shape in actual code.

If you enjoyed this article, please join my substack newsletter — refluent. You’ll also be notified when the next article is published.

Refluent shares sharp insights on quality engineering, performance testing, and the future of intelligent automation.

How to implement self-healing tests with AI was originally published in refluent on Medium, where people are continuing the conversation by highlighting and responding to this story.