The Software Feedback Rings: A New Way to Visualise Feedback

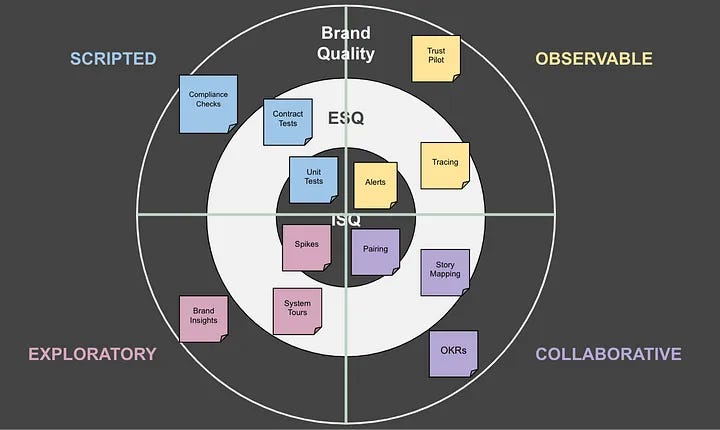

In June, I attended the British Computer Society Software Testing Conference in London. During one of the talks, "Testing: a solved problem in engineering?" by Callum Akehurst-Ryan, I was introduced to the Quality Radar created by Nicola Sedgwick.

It's a great tool that visualises how much testing you are doing and what types of techniques you are relying on, which helps you spot gaps and prompts you to think about what techniques could help you fill them.

However, Callum’s talk got me thinking about the unknown areas of a system and how different techniques can help uncover them. It also made me wonder if this is really just a quality radar. Could we reframe it as a feedback radar? That shift would open it up beyond testing to include other feedback mechanisms, helping us think more holistically about learning and improvement.

Software feedback rings

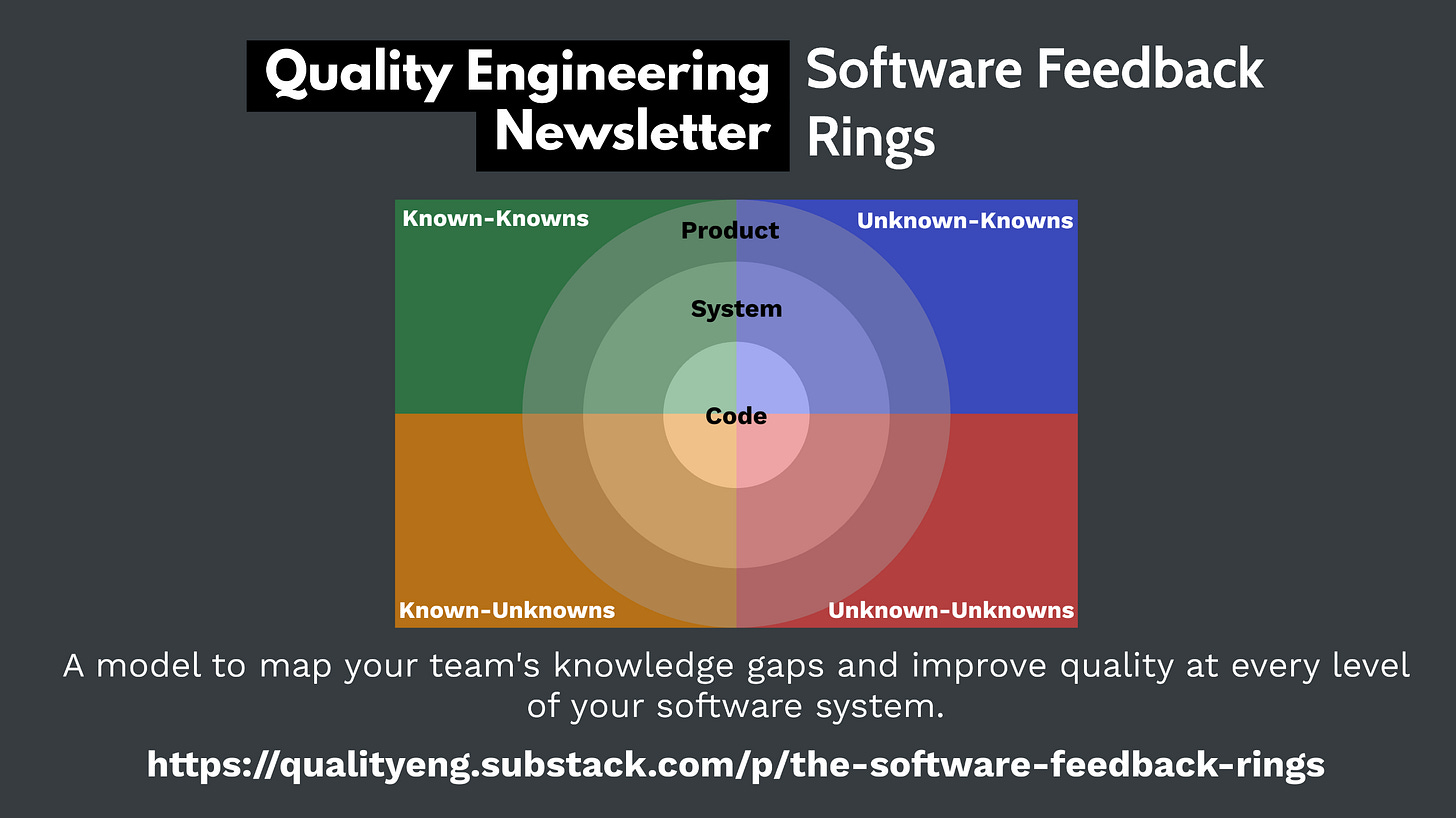

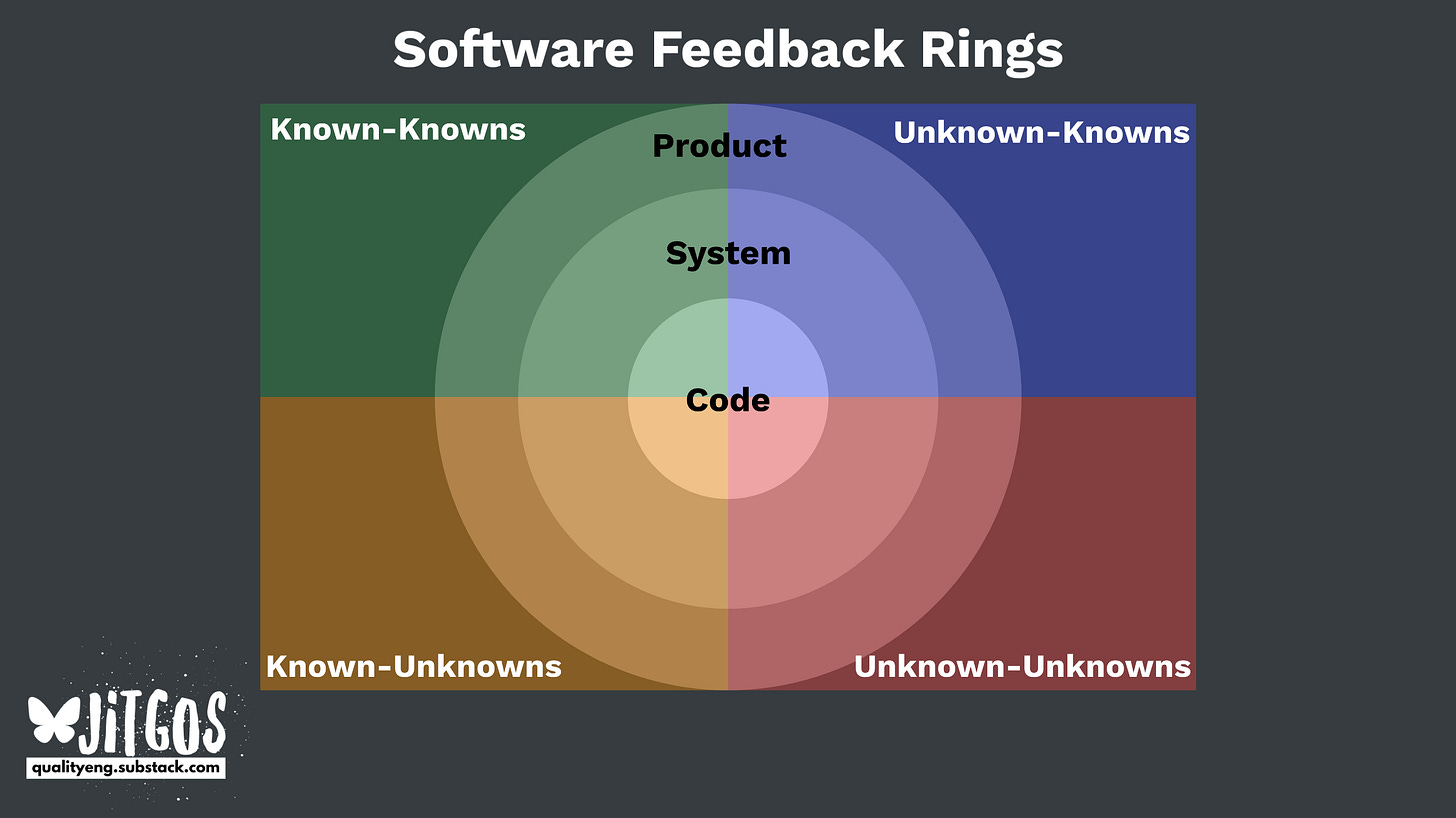

To reframe the Quality Radar, I propose a new model: the Software Feedback Rings. This model combines two concepts, categories of uncertainty and the code, system and product view of quality, to help teams get a more holistic view of their system.

Feedback about what? Uncertainty

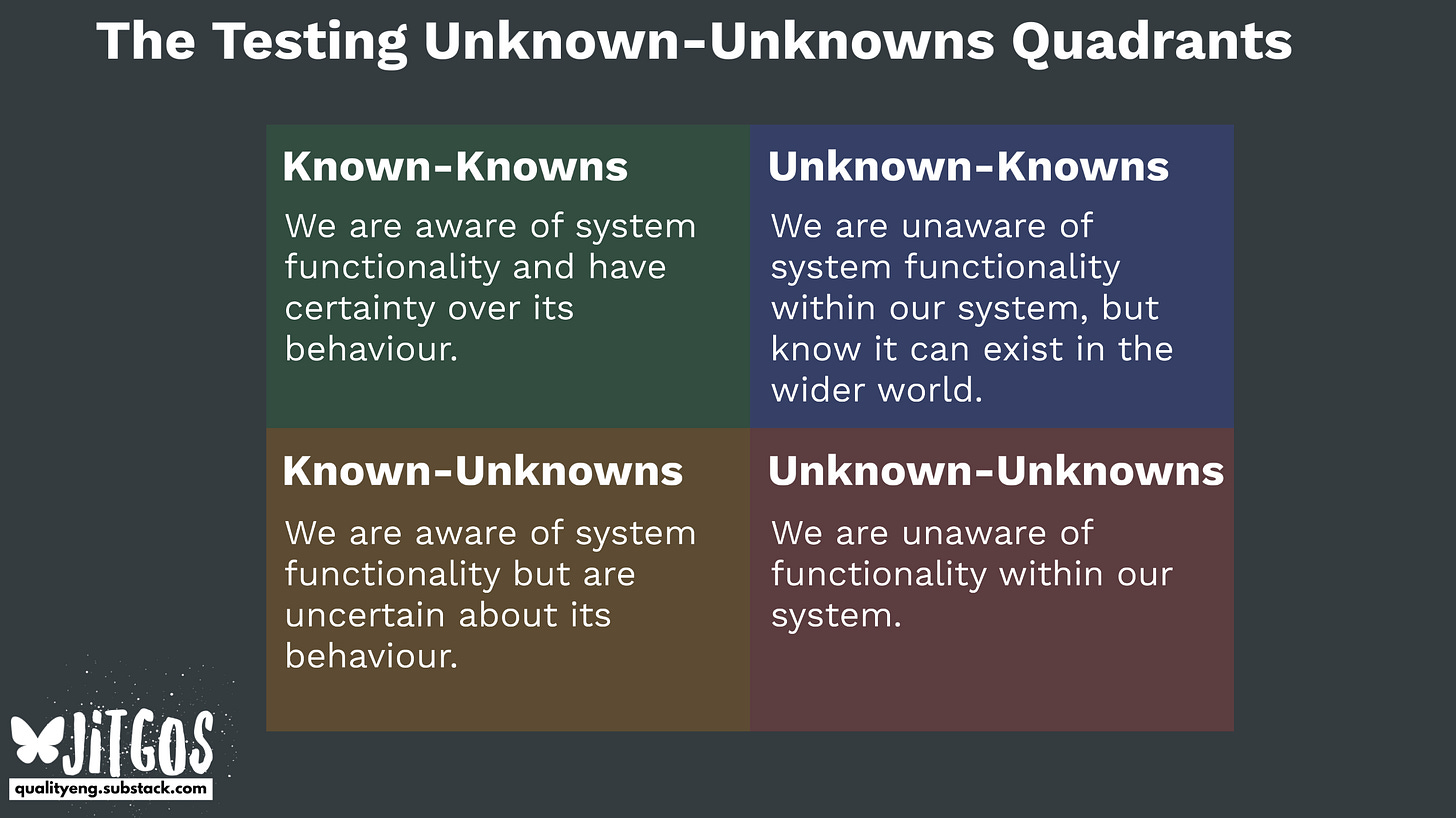

First, what types of feedback are we trying to get? From the conclusion of The Testing Unknown Unknowns:

"Categorising uncertainty into the four quadrants of known-knowns through to unknown-unknowns helps people see that they will have gaps in their knowledge about their software system. Each quadrant is a nudge for teams to think about other ways to increase their awareness about their systems..."

We can use each of the four uncertainty quadrants, from known-knowns to unknown-unknowns, to help engineering teams identify gaps in their feedback on how their systems behave. These will form the quadrants of the rings (more on this later).

Uncertainty about what exactly? Software system behaviour

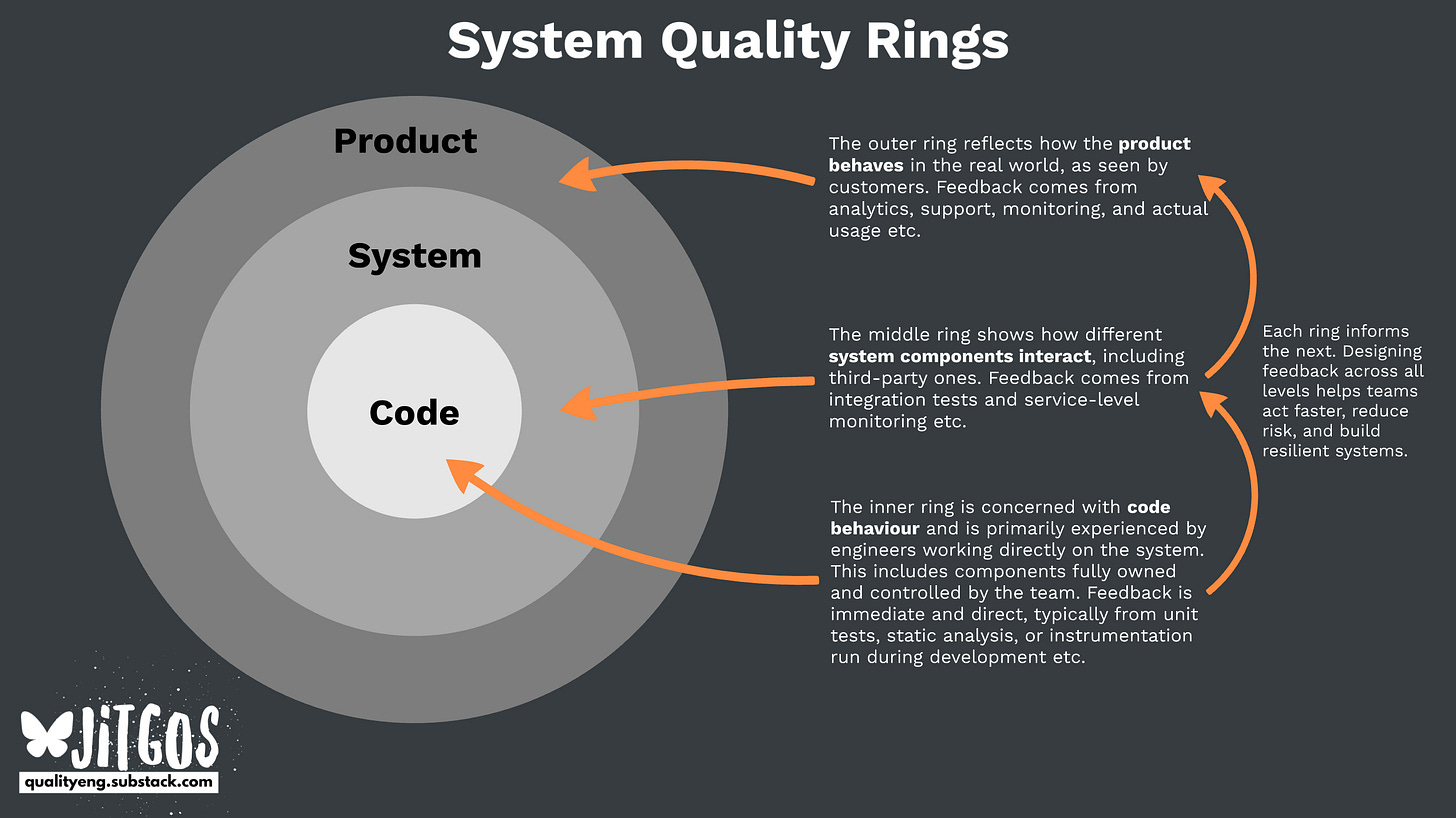

A software system can be quite big, with some parts wholly controlled by the team and others that it can only influence. So, how do we know which approaches to feedback to use in which situations? This is where Google's theory of quality, which splits quality into code, system and product quality, comes in. From How to build high-quality systems with Google's theory of quality:

"Breaking product quality down into three distinct areas of code, systems and product has quite a few benefits when considering building quality in at the product level.

Code quality allows engineering teams to focus on what they have direct control over. Concentrating on their area of control helps aid discussion on how they can make their code more maintainable with the objective of increasing their velocity.

System quality helps teams to start thinking about what quality attributes their organisation cares about. Breaking down quality into its attributes can help teams begin to connect how code quality affects system quality. This nuanced view of quality also helps the organisation see what the team doesn't control and what their dependencies are in a way that links to what the organisation values.

Product quality helps teams and organisations start thinking about quality attributes their end users care about. Teams can use product quality attributes to see how changes to the code and system could improve product quality for end users and customers.

What I like about these three different quality categories is that it's looking at quality from three different perspectives. Code quality is quality as perceived by developers. System quality is quality as perceived by the organisation, and product quality as perceived by the end users."

But how do we use both concepts together? This is where we look at the quality radar from a slightly different angle.

Switching up the zones and quadrants

The rings

For the rings, I'd like to shift from the radar's zones of internal system quality (ISQ), external system quality (ESQ) and brand quality to code, system and product, to align more closely with Google's theory of quality. To be fair, they map in almost the same way; I just prefer the terminology and the boundaries it draws: code (what we control), system (third-party frameworks and code) and product (how the product performs in production).

The quadrants

For the quadrants, we use the four uncertainty categories from The Testing Unknown Unknowns instead of the radar's exploratory, scripted, observable and collaborative quadrants. Again, I think the radar's quadrants are good and help teams think about the different types of testing in each zone. However, I believe the uncertainty categories help teams think more critically about what they do and don't know, and how they can go about discovering that information.

Combining the quadrants and the rings

If you overlay the two, you get the Software Feedback Rings:

The inner ring is focused on how the code behaves and is primarily experienced by the engineers of the system. This is code that the engineering team has complete control over. Feedback here is typically provided directly from the code, either through tests or instrumentation.

The middle ring focuses on system behaviour: the software system as a whole, including integration with third-party systems. This is code the engineering team has influence over but not always direct control of, typically your dependencies. Feedback usually comes from integrated end-to-end environments rather than the delivered system, so expect to see stubs standing in for third parties.

The outer ring focuses on product behaviour and is primarily experienced by customers or end-users of the system. This is the product or service that is delivered. Feedback here is usually from external systems.
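To make the overlay a little more concrete, here is a minimal Python sketch that treats the three rings and four uncertainty quadrants as a twelve-cell grid and lists the cells a team currently has no feedback for, much as the radar helps you spot gaps. It is an illustration under assumptions, not part of the model itself: the names `RINGS`, `QUADRANTS`, `find_gaps` and the inventory format are hypothetical, the middle two quadrant names assume the standard known-unknowns/unknown-knowns split, and the example placements of mechanisms are not a recommended mapping.

```python
from itertools import product

# The three rings, from innermost to outermost.
RINGS = ("code", "system", "product")

# Assumed names for the four uncertainty quadrants.
QUADRANTS = ("known-knowns", "known-unknowns", "unknown-knowns", "unknown-unknowns")


def find_gaps(inventory):
    """Return the (ring, quadrant) cells that no feedback mechanism covers.

    `inventory` maps each feedback mechanism to the list of cells it
    provides feedback for (a hypothetical format chosen for this sketch).
    """
    covered = set()
    for mechanism, cells in inventory.items():
        for ring, quadrant in cells:
            # Reject typos so a misplaced mechanism doesn't hide a real gap.
            if ring not in RINGS or quadrant not in QUADRANTS:
                raise ValueError(f"{mechanism}: unknown cell ({ring}, {quadrant})")
            covered.add((ring, quadrant))
    # Walk the full grid in ring order so the gaps read from the inside out.
    return [cell for cell in product(RINGS, QUADRANTS) if cell not in covered]


# Hypothetical inventory for one team; the placements are illustrative only.
inventory = {
    "unit tests": [("code", "known-knowns")],
    "integration tests with third-party stubs": [("system", "known-knowns")],
    "production monitoring": [
        ("product", "known-unknowns"),
        ("product", "unknown-unknowns"),
    ],
}

for ring, quadrant in find_gaps(inventory):
    print(f"No feedback yet: {ring} ring / {quadrant}")
```

Each printed gap is a prompt for a conversation rather than a task, for example: what would tell us about unknown-unknowns in the code ring? How a team records and places its own mechanisms is left open here.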


How do the quality radar and software feedback rings differ?

The quality radar is great at helping teams understand how they are currently inspecting for quality. It also helps them see where they could improve by using the four quadrants of exploratory, scripted, observable and collaborative approaches. What I really like about the radar is its ease of use, which allows QEs (quality engineers) to quickly understand where the team is.

The software feedback rings differ in that they bring a systems-thinking approach to understanding where the team is (via code, system and product): how the team currently receives feedback about different areas of its software system, which type of uncertainty that feedback helps reduce, and where else the team could look to further lower its uncertainty about the system's behaviour.

The trade-off, though, is that explaining each of the rings and the uncertainty categories takes more effort and can be confusing if the team hasn't encountered them before. Additionally, the software feedback rings don't indicate which types of feedback belong in each segment, so teams may need more support to work this out. By contrast, the radar's four quadrants of exploratory, scripted, observable and collaborative give a big clue about which approaches to use for getting feedback.

Which is better?

Well, neither. They are different, and which one suits you depends on what you're trying to achieve.

Both the software feedback rings and the quality radar help teams better understand their current state, but they approach the question of "what else could you be doing?" from different angles.

The software feedback rings look at software systems from an uncertainty perspective and help teams identify the type of feedback that reduces it.

The quality radar, by contrast, looks at the system from an exploratory, scripted, observable and collaborative perspective, which strongly hints at the approaches you should be reaching for.

So you could say that the software feedback rings are about lowering your uncertainty, while the quality radar is about encouraging teams in a particular direction.

I feel the software feedback rings need a more structured introduction for teams new to their concepts, whereas the quality radar is probably easier to grasp.

However, my preference is for the software feedback rings, as they can be a great starting point for teams to think about uncertainty and how to obtain feedback that helps mitigate it. That makes them a good way to get teams talking about testing without ever having to say "testing".

Let's build on this together

This model serves as a starting point, providing a way to reframe our thinking about quality and feedback. My goal is to refine it and create a practical guide for engineering teams.

What are your initial thoughts? Are there other frameworks that you think would complement this model well? What feedback mechanisms would you place in each ring?

I'd love to hear your ideas. Leave a comment or reply to this email to join the conversation. Your feedback will help shape the next post in this series.