Amazon’s journey with mobile testing infrastructure

If Medium puts this content behind a paywall, you can also view it here (LinkedIn)

Every day, thousands of engineers run millions of tests to validate the Amazon Store works as expected. We aspirationally follow the test pyramid, having the bulk of our test coverage coming from unit tests, followed by a strong layer of component and integration tests for each service.

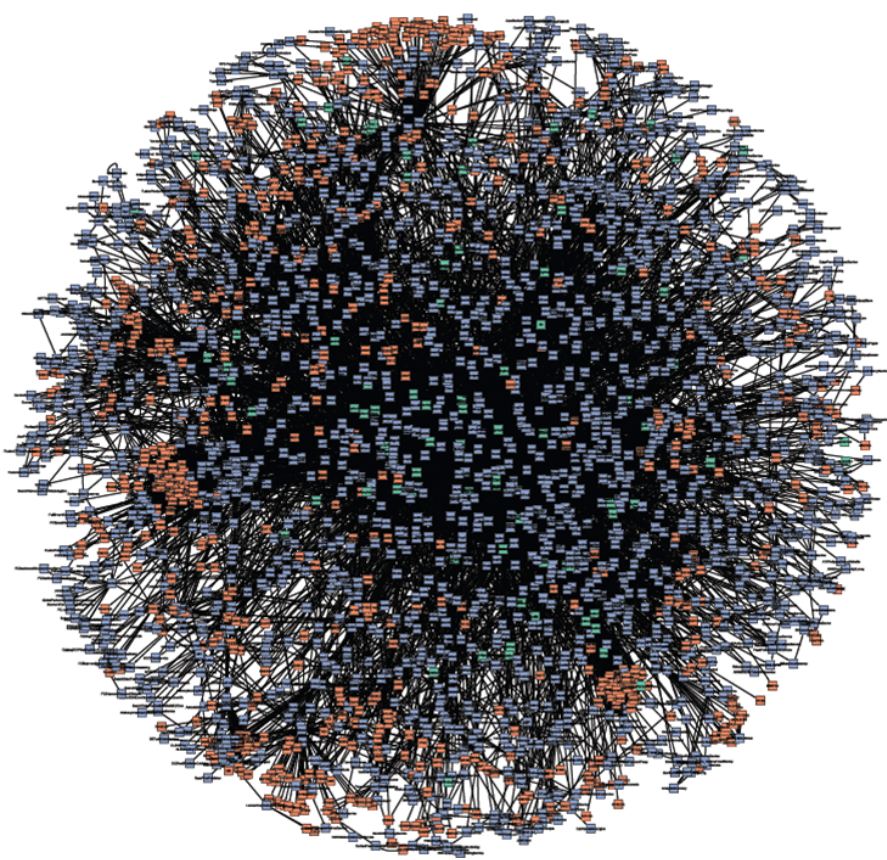

However there are some things that we can only test end-to-end (“E2E”), at the system level, in the UI. Additionally, in large distributed systems, the most interesting bugs tend to live in the way components interact with each other, particularly at scale, which makes E2E even more critical. This is what amazon.com looked like the day I was hired, sixteen years ago (every dot is some sort of service), and it hasn’t gotten any simpler to serve traffic since:

Since most of our customers are accessing the Store via our mobile app, that means running all our critical end-to-end tests on mobile devices. Our testing has to reflect the way our customers are using us.

Amazon Store engineers have always tested local changes both via their own physical devices, and via virtual devices (by spinning off an emulator or simulator in their dev environment). And this works well for a single developer. But how do we evolve our device test infrastructure to be able to do this efficiently and at scale: thousands of engineers, thousands of commits, thousands of tests, hundreds of devices?

First: We created labs of physical devices

To run all our critical tests on mobile devices, we maintained vast labs of physical devices (iOS, Android) throughout the Amazon campus.

Maintaining these labs is cumbersome and expensive. Our engineers need to spend their time keeping track of the health of the devices, and fixing them when they have issues. Unlike commercial servers, consumer devices are not built to have the kind of reliability required to be running 24x7 in labs, so keeping hundreds or thousands of phones healthy becomes a scaling bottleneck. Lab management is non-differentiated work for the majority of us: we want to spend our time writing great tests to ensure Amazon products provide our customers with the highest quality experience, not maintaining devices in labs.

There are other problems too. It isn’t always easy to get our hands on devices for all the configurations we want. As more versions of iOS and Android devices reach the market, that matrix of must-have devices grows very large. These physical labs also can be wasteful, as most of the devices can sit idle for the majority of the time, only to run a test occasionally.

Despite all these challenges, physical device labs continue to be critical to us in many business (and will always be). How does Amazon test “Living Room” devices? Smart TVs (like Samsung, Sony, LG, etc); streaming devices (like Fire TV sticks, Rokus, etc); game consoles (Playstation, XBox); set top boxes, blu-ray players, etc? How do you test prototype devices that will reach the market years from now? A few months ago, I visited our Prime Video London offices and posted about it here.

But for off-the-shelf mobile devices, we needed to think differently about our test infrastructure, and so we reached our first inflection point.

Then: We moved to AWS-managed physical device infrastructure

This is when we started shifting a lot of our testing to run on AWS Device Farm. This allowed us to run our tests across an extensive range of desktop browsers and real mobile devices, without having to provision and manage any testing infrastructure. We were able to offload the non-differentiated lab management work to AWS, which freed our engineers to spend their time improving the actual tests. Additionally, by using Device Farm we were able to only pay for the devices that we used, instead of having giant labs with mostly idle devices, and benefit from their extensive device coverage.

AWS Device Farm gave us the best of both worlds: we were able to run our tests against physical devices, without the burden of having to have physical devices. By leveraging AWS, were able to decommission a number of labs we owned and scale our testing significantly. Reliability of our tests improved: Device Farm runs with an SLA of 99.9%, which was prohibitively expensive for us to achieve when we operated our own device labs.

Amazon at large still does have tens of thousands of devices in private labs for testing purposes, particularly for Kindle e-readers, Alexa devices, Fire Tablets, Fire Sticks, Ring devices, etc. But for the Amazon Store, the vast majority of our device testing happens via AWS Device Farm, although we still operate a number of private device labs for very specific reasons (such as needing access to hardware).

Today, AWS Device Farm is business-critical for ensuring that changes to the Amazon Store are high quality and don’t negatively impact our customers. They have been great partners in our quality journey, and I expect that will continue to hold true indefinitely.

Now: One more inflection point ahead

At this point, we’re facing the next inflection point: there are two trends that are going to increase the scale at which we run our tests, easily by 100x.

[1] Shift-left testing

This means moving testing activities earlier in the development process, focusing on identifying and resolving issues at the earliest possible stage to improve quality and reduce costs.

Traditionally, because UI tests were expensive to run and flakey, we only ran most of them after a piece of code was submitted and merged, as part of the outer developer loop. This led us to identifying errors too late in the process. Amazon, and the industry at large, is making significant investment to shift testing left, so that we can run those same tests as part of the inner developer loop instead, and find the errors much earlier in the process, reducing the blast radius.

There’s something that makes shift-test-left an order of magnitude more important today than a few years ago:

As we increasingly use AI to generate code, providing LLMs with ways to rapidly and iteratively validate how local changes impact interconnected services and complex distributed applications, before commit, is essential.

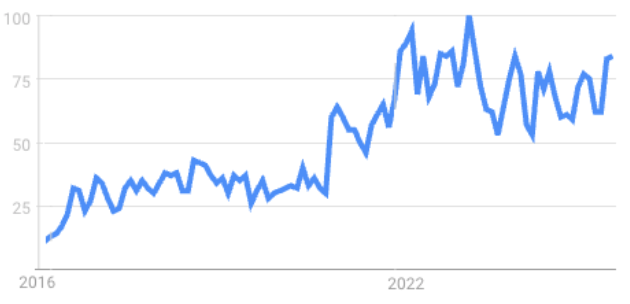

The idea of shifting left has existed in the industry for as long as I can remember, but there has been a significant emphasis on it in the last few years. A quick search on Google trends corroborates it: the following graph shows the popularity of searches for “shift-left testing” on Google between 2016 and 2025 (a value of 100 is the peak popularity for the term):

[2] LLM-based testing

Traditionally, creating UI tests has been expensive, because it required engineers with domain expertise in various UI testing frameworks (e.g. Selenium, Puppeteer, Cypress, WebdriverIO, Cucumber, Appium, Espresso, XCTest, …) manually writing these tests. They were also cumbersome to maintain, as the tests were subject to failing if the UI changed and required to be constantly modified to reflect front-end changes.

LLMs provide a way to author tests that is more natural. Engineers can write their expectations for the behavior of a system in plain English, and an LLM can interpret that and decide how to drive the UI to accomplish that desired goal. LLMs introduce the ability to analyze images and text, which makes validation simpler and more natural too. No need to couple your tests to a specific framework. A test can simply be expressed as “Go to the Amazon Store, search for Harry Potter books, and verify the books are present in the search results.” Maintenance is simpler too: because the LLMs can navigate some amount of ambiguity, they can be resilient to simple UI changes that in the past would have broken thousands of tests (eg. moving a button to a different location or renaming a UI element).

Tests being significantly easier to create and maintain frees our engineers (and LLMs) to create them at a rate that was simply unthinkable just a couple of years ago.

Combine [1] our commitment to shift-left testing broadly for humans and LLMs, with [2] a reduced cost for creating and maintaining tests, and now you understand how that inflection point is upon us: our testing infrastructure will need to run 100x more tests than we do today. With that kind of scale, cost and speed considerations, running these tests against physical devices only becomes challenging.

Augmenting (NOT replacing) physical device testing with virtual device testing

Scaling by 100x requires different thinking. This inflection point means that we need to invest in being able to run these tests at scale on virtual devices, via emulators and simulators, running on containers (e.g. Amazon ECS, Amazon EKS).

Using virtual devices is not a particularly novel concept; engineers have been testing in their inner loop with an emulator running on their developer desktop for a decade. But running emulators and simulators at scale is a new shift.

Virtual devices have the following benefits:

- Scalable: It’s a lot more feasible to spin off 10,000 emulators on containers on AWS than it is to get my hands on 10,000 physical phones

- Reliable: Virtual devices tend to be more reliable than physical devices, because they’re running on highly available servers. Physical devices are susceptible to hardware failures like getting disconnected from the wifi, a bad USB cable, etc.

- Cheaper: running a test against an Android virtual device is about 10% of the cost of running a test against a physical device — simply because these can run on containers on inexpensive AWS servers (vs. on expensive consumer devices).

- Faster: the actual runtime of a test doesn’t change whether it’s running on a physical device or a virtual device, but the setup time for the test (as well as the teardown time) can be significantly faster. (There are exceptions: physical devices are actually faster than emulators in cases where hardware matters, such as games that require and use dedicated GPUs, or the 3D scan/video playback feature on Amazon Store app).

Given that virtual devices can be more scalable, more reliable, cheaper and faster — why would we continue running on physical devices at all?

The answer is simple but critical: Fidelity.

You should use the same devices your customers use. Unlike virtual devices, physical devices give you a more accurate understanding of the way users interact with your application by taking into account factors like memory, CPU usage, battery draw, time to first frame, location, and modifications made by manufacturers and carriers to the firmware and software. Emulators and simulators may lack that fidelity, leading to issues escaping to production because they did not surface on virtual devices, but will most certainly surface on physical devices.

Hereby lies a tradeoff. Do you want scalability/reliability/cost/latency, or do you want fidelity?

My assertion is that this is not a “OR” choice, it’s an “AND” choice.

My personal view is to have a strategy that leans towards the first for some cases, and leans towards the second for other cases:

- Use virtual devices when scalability/reliability/cost/latency matter most: [i] Anything running in the inner developer loop should default to running on virtual devices; [ii] Earlier stages of CI/CD (e.g. Beta stages) should default to running against virtual devices

- Use physical devices when fidelity matters most: [i] All business critical test cases must run on physical devices; [ii] All hardware-dependent test cases, and performance/latency measurements; [iii] Final stages of CI/CD (e.g. last safety net before things are pushed to production) must run on physical devices

Here’s some more thoughts on strategy:

- Your tests should generally not hard-code or care whether they’re running on a physical device or a virtual device. In fact they should not care about what device they’re running on at all.

- In reality some tests specifically validate a particular platform, but as a general rule, decouple the “what” (the test) from the “where” (the device). This allows you to dynamically run any one test on multiple platforms without making changes.

- Specific test cases may only be able to run on physical devices (e.g. they require a specific hardware to run on), regardless of where in the software development cycle they are executed.

- Service owners may override this behavior for situations where the fidelity of physical devices trumps scalability/reliability/cost/latency concerns.

In that sense, virtual device testing is augmenting/enhancing your testing capabilities but in no way replacing the physical device testing you do. They are both part of a holistic test strategy.

The test pyramid ends up looking more like this:

Notice the percentage of E2E tests increases in this model, as a consequence of shifting testing left and LLMs simplifying the authoring and execution of E2E tests. This is positive, because it helps us prevent the harder-to-find bugs that lurk in the interaction between our large distributed systems.

I’m extremely excited about a future where testing happens ubiquitously in the inner developer loop as it does in the outer developer loop, therefore preventing issues proactively. I’m also extremely excited about how GenAI will help us increase test coverage of our products. And by scaling both our physical and virtual device testing infrastructure, I believe we’re ready for that future!