Top 3 Takeaways from the British Computer Society Software Testing Conference 2025

In June, I spent the day at the BCS SIGiST Summer Conference in London. The theme was Finding Calm in Chaos: Applying Testing to a Changing World.

It was a great mix of topics: from AI and sustainability to model-based testing and the human side of pull requests. I caught up with a few familiar faces, took far too many notes, and left with a notebook full of ideas.

Here are my top 3 takeaways and a few bonus mentions that are still bouncing around in my head.

1. Assumptions are where the testing starts

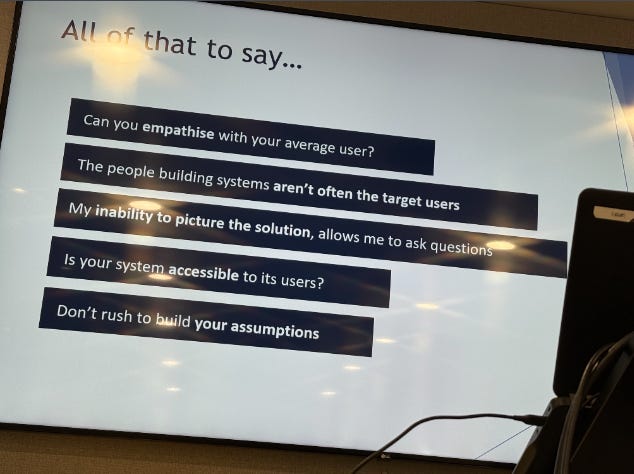

Emily O'Connor's talk was a reminder that our work often begins, not ends, with assumptions. As testers, one of our biggest contributions, and one of the most human, is identifying and challenging them.

With AI becoming more integrated into systems, this becomes even more important. Biases aren’t just bugs; they’re baked into data, processes, and the choices we make without thinking. Emily made a strong case for sharpening the skill of assumption-hunting, and I left wondering: could AI tools help us find assumptions as well as we find failures?

If you'd like to learn more, check out my notes from Emily’s talk: You Are Not Your Customer.

2. Is testing a solved problem?

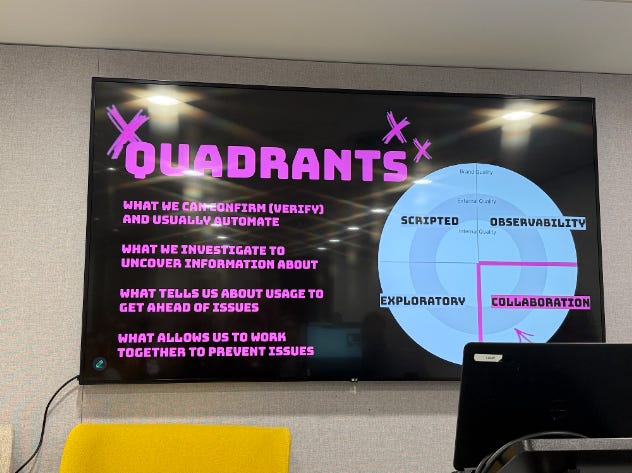

Callum Akehurst-Ryan introduced the Quality Radar, a lightweight visual for mapping what kinds of testing (and, by extension, feedback) you’re doing across your system.

It’s simple, practical, and collaborative. A great conversation starter with your team, and a helpful way to spot gaps or over-reliance on one type of testing. It really helps teams see that testing isn’t a “solved problem,” and there are other things you could be doing.

But what if we zoomed out? I left the talk thinking it might be better framed as a Software Feedback Radar. Not just tests, but everything from logs and alerts to support tickets and user behaviour (which, to be fair, are included in the example that Callum shared). Thinking in terms of feedback makes testing part of a larger feedback ecosystem, one that’s central to learning and improving. It also shifts the narrative away from “here’s another talk/workshop about testing” and towards how we understand how our software works.

I particularly like the quadrants too, especially Collaboration, which helps teams see how their ways of working help build quality in (e.g. pair programming, triads, quality mapping). But the Scripted and Exploratory quadrants could leave some people hung up on this being a testing thing, not an improve-quality thing. It’s not a major issue, but if we’re trying to talk more about quality than testing, it doesn’t help.
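As a rough illustration of how a radar like this can surface gaps and over-reliance, here is a minimal Python sketch. It is not Callum's tool or his data: the quadrant list contains only the three names mentioned in this post (the real radar may have others), and the team inventory, the `summarise` function, and the 60% `dominance` threshold are all hypothetical.

```python
from collections import Counter

# Only the quadrant names mentioned in this post; the real radar may differ.
QUADRANTS = ["Collaboration", "Scripted", "Exploratory"]

# Hypothetical inventory of a team's feedback sources: (source, quadrant).
team_feedback = [
    ("unit tests", "Scripted"),
    ("end-to-end suite", "Scripted"),
    ("API contract tests", "Scripted"),
    ("pair programming", "Collaboration"),
]


def summarise(feedback, quadrants=QUADRANTS, dominance=0.6):
    """Return (gaps, over_reliance) for a list of (source, quadrant) pairs.

    gaps: quadrants with no feedback sources at all.
    over_reliance: quadrants holding more than `dominance` of all sources.
    """
    counts = Counter(quadrant for _, quadrant in feedback)
    total = sum(counts.values())
    gaps = [q for q in quadrants if counts[q] == 0]
    over_reliance = [
        q for q in quadrants if total and counts[q] / total > dominance
    ]
    return gaps, over_reliance


gaps, over_reliance = summarise(team_feedback)
print("Gaps:", gaps)
print("Over-reliance:", over_reliance)
```

The value is in the conversation the output starts, not the numbers themselves; the threshold is arbitrary and a team would pick its own.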

Check out my notes from Cal’s talk to learn more about the Quality Radar: Testing: a solved problem in engineering?

3. You can’t test in production when you’re in space

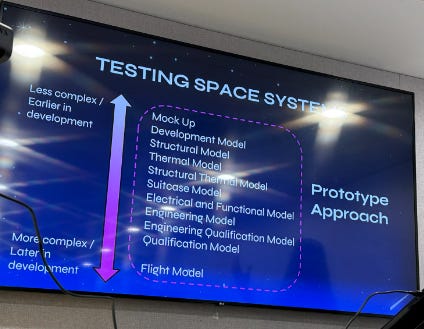

Beth Probert gave a fascinating talk about testing resilient space systems. In space, failure isn’t just costly, it’s catastrophic. That means everything must be tested on Earth, under simulated space conditions, well before launch.

Beth walked us through how model-based testing supports this process, allowing teams to anticipate and explore how systems might behave during launch or in orbit. What stood out to me was how much the space industry relies on shared learning. Production failures are openly analysed across organisations to avoid repeat issues.
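To give a flavour of what model-based testing means in practice, here is a minimal Python sketch. It is not taken from Beth's talk: the satellite modes, the events, and the `FlightSoftware` class are all hypothetical stand-ins. The idea is that a small model describes the expected behaviour, every event sequence up to a given length is generated from it, and the system under test is checked against the model after each sequence.

```python
from itertools import product

# Hypothetical model: expected mode transitions for an imaginary satellite.
# None of these modes or events come from the talk.
MODEL = {
    ("SAFE", "boot_ok"): "NOMINAL",
    ("NOMINAL", "fault"): "SAFE",
    ("NOMINAL", "start_downlink"): "DOWNLINK",
    ("DOWNLINK", "end_downlink"): "NOMINAL",
    ("DOWNLINK", "fault"): "SAFE",
}
EVENTS = sorted({event for _, event in MODEL})


def model_step(state, event):
    """Expected next state; events the model does not list leave it unchanged."""
    return MODEL.get((state, event), state)


class FlightSoftware:
    """Stand-in for the system under test (hypothetical)."""

    def __init__(self):
        self.mode = "SAFE"

    def handle(self, event):
        if event == "fault":
            self.mode = "SAFE"
        elif self.mode == "SAFE" and event == "boot_ok":
            self.mode = "NOMINAL"
        elif self.mode == "NOMINAL" and event == "start_downlink":
            self.mode = "DOWNLINK"
        elif self.mode == "DOWNLINK" and event == "end_downlink":
            self.mode = "NOMINAL"


def find_mismatches(max_length=4):
    """Replay every event sequence up to max_length against model and system."""
    mismatches = []
    for length in range(1, max_length + 1):
        for sequence in product(EVENTS, repeat=length):
            expected, sut = "SAFE", FlightSoftware()
            for event in sequence:
                expected = model_step(expected, event)
                sut.handle(event)
            if sut.mode != expected:
                mismatches.append((sequence, expected, sut.mode))
    return mismatches


print(find_mismatches())
```

Exhaustive enumeration only works for tiny models like this one; real tools typically sample or prioritise paths, but the principle of exploring behaviour from a model rather than hand-writing each case is the same.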

The move toward standardisation (fewer custom builds, more reusable components) is helping too. It reminded me just how powerful good quality engineering can be when lives (and satellites) are on the line. It also reinforces the question: why roll your own when well-documented, well-tested frameworks already exist for the same functionality?

It also made me wonder: how can we bring some of this mindset into other high-risk domains, such as healthcare, or even low-risk systems?

See my notes from Beth’s talk to learn more: Failure Is Not an Option: Testing for Resilient Space Systems.

Bonus Mentions

PR reviews as quality moments - Andrea Jensen reframed pull requests as collaborative checkpoints, not just code inspections. Her tester-focused PR approach was great and something more people need to talk about. How do QAs support Pull Requests?

Generalists for the win - Gerie Owen made a strong case for the “flexible generalist”, someone who doesn’t know everything but knows how to find what matters. It’s an undervalued strength in testing.

Retail goes green - Sivaprasad Pillai’s talk on sustainable retail systems reminded me how digital changes often benefit the business more than the customer. A good tester question: Who is this really helping?

AI changes how we think about testing - Bryan Jones highlighted how traditional approaches don’t fully apply when testing AI-based systems. With behaviour that’s probabilistic, the focus shifts from correctness to appropriateness. It’s a reminder that quality in AI isn’t just about output, it’s about trust, judgment, and knowing when to fall back to existing deterministic systems.
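On that last point about falling back to deterministic systems, one common pattern looks roughly like the Python sketch below. It is my own illustration, not something from Bryan's talk: the `Prediction` type, the keyword rules, and the 0.8 threshold are all hypothetical, and it assumes the model exposes some kind of confidence score.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # assumed to be a score between 0.0 and 1.0


def rule_based_category(text: str) -> str:
    """Deterministic fallback using hypothetical keyword rules."""
    lowered = text.lower()
    if "refund" in lowered:
        return "billing"
    if "password" in lowered:
        return "account"
    return "general"


def categorise(text: str, model_prediction: Prediction, threshold: float = 0.8):
    """Use the model's answer only when it is confident enough.

    Returns (category, source) so tests and logs can see which path was taken.
    """
    if model_prediction.confidence >= threshold:
        return model_prediction.label, "model"
    return rule_based_category(text), "fallback"


print(categorise("I want a refund", Prediction("billing", 0.95)))
print(categorise("I forgot my password", Prediction("billing", 0.4)))
```

Returning the source alongside the answer gives testers something concrete to probe: how often the fallback fires, and whether the threshold matches the level of trust the team actually has in the model.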

Final thoughts

It was a brilliant day packed with ideas, reflections, and timely reminders that testing isn't a solved problem (thanks to Callum for the reminder). It's a constantly evolving one. So, how do we keep pushing ourselves, our teams and our industry forward? By sharing and learning from others.

If you're curious about testing, AI, or how quality engineering is evolving, I’d definitely recommend attending the next SIGiST event. You can find more information at BCS SIGiST. If you want to dive deeper into any of the above talks, check out my notes from the BCS SIGiST Summer Conference.