Quality Engineering in the Age of AI - My Takeaways from StarEast 2025

If there was one recurring theme at this year's StarEast, it was this: AI is here, it's moving pretty quickly, and it's not going to wait for anyone to catch up. In between all the demos, keynotes, and vendors, one thing became very clear to me: Quality Engineering isn't disappearing - it's become more important than ever!

There were excellent talks with live demos of LLMs writing code in small steps and helping debug issues. But what stood out to me was how much the tools still relied on the user's critical thinking to get the best out of them. The quality of these outcomes still depends heavily on the quality of our thinking.

Not just prompting - guiding

What I appreciated most was the emphasis on how we guide AI, not just prompt it. Whether in coding tools, agent-based testing systems, or low/no-code automation, the pattern is the same: the better context, constraints, and intent we give the AI, the better the results.

This shifts our work. Instead of spending most of our time writing the code or crafting the tests ourselves, we're beginning to focus on verifying AI-generated output, even creating agents to assess other agents, and designing guardrails that keep things on track. It becomes less about how we do the work and more about defining the outcomes we want and directing the AI systems towards them.

The "vibe coding" sessions reminded me that collaboration with AI is a skill and almost a form of leadership. It's about enabling conversations, feedback loops, and clear standards - especially when the tools and roles are changing.

So what does quality engineering look like in this new context?

Here are some of my key takeaways:

You can't outsource judgment. AI can write code, plan steps, and even test itself, but only humans can decide what's good enough, what's ethical, or what's risky in a given context. And it's that context that makes QEs critical: making sense of the systems we're working in and helping AIs understand them.

Agents testing agents. We need to design systems of agents, not just rely on one model. Use the right agent for the right outcome, and test agents with other agents, guided by domain expertise. I don't think it's going to be a one-LLM-fits-all situation, and I can see QEs leveraging multiple systems.

Refocus on communication. Whether it's explaining what good looks like to an AI or to a junior engineer, clarity is key. Communication is going to be a core skill within engineering. The better you are at articulating yourself, the better the outcomes you'll produce.

This isn't about learning AI for the sake of it. It's about rethinking how we bring quality into systems that are no longer fully deterministic or human-authored. The better we understand how quality is created, maintained, and lost within complex software systems, the better we will be able to direct AIs to build quality in. That's the work of quality engineering, and it's more relevant than ever.

Highlights from the talks I attended

Here are some moments that stood out. Follow the links for more detailed notes.

Keynote: How to get AI and agents to stop being weird

Dona had a strong take on what “agentic” actually means. A lot of what we call agentic AI today is just automation, like sending an email with a new recipe every Monday. True agentic AI reasons, adapts, and uses automation to achieve outcomes, rather than just waiting for us to direct it.

Keynote: Time for Vibe Coding

Malissia did a live demo using Cursor IDE. She showed that by working in small steps, giving AI detailed context, and constraining the AI’s actions to manageable steps, we can get useful tools even if they’re not production-ready.

I really liked the part where she asked Cursor to create five test plans in the style of five well-known testers, then chose one and iterated on it to achieve her test strategy.

AI-ML SDET: A title change or a new role?

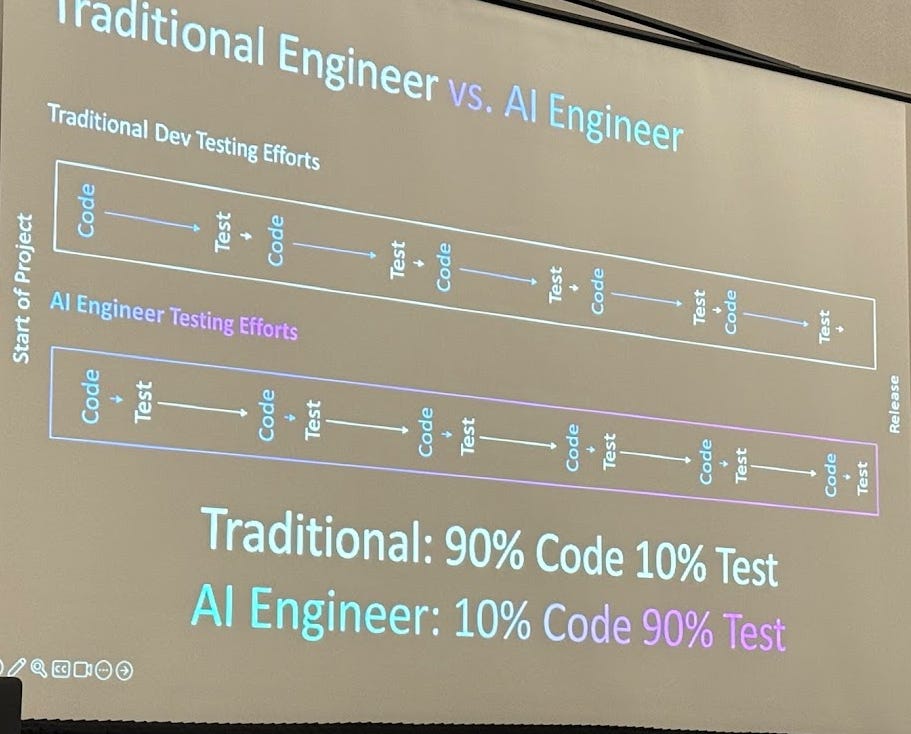

Titles aside, the shape of the work is shifting. We’re going from spending 90% of our time writing code and 10% verifying it, to spending 90% verifying what AI produces and 10% writing code, plus implementing guardrails to stop things from going off track.

Hiring the Best Quality Engineers

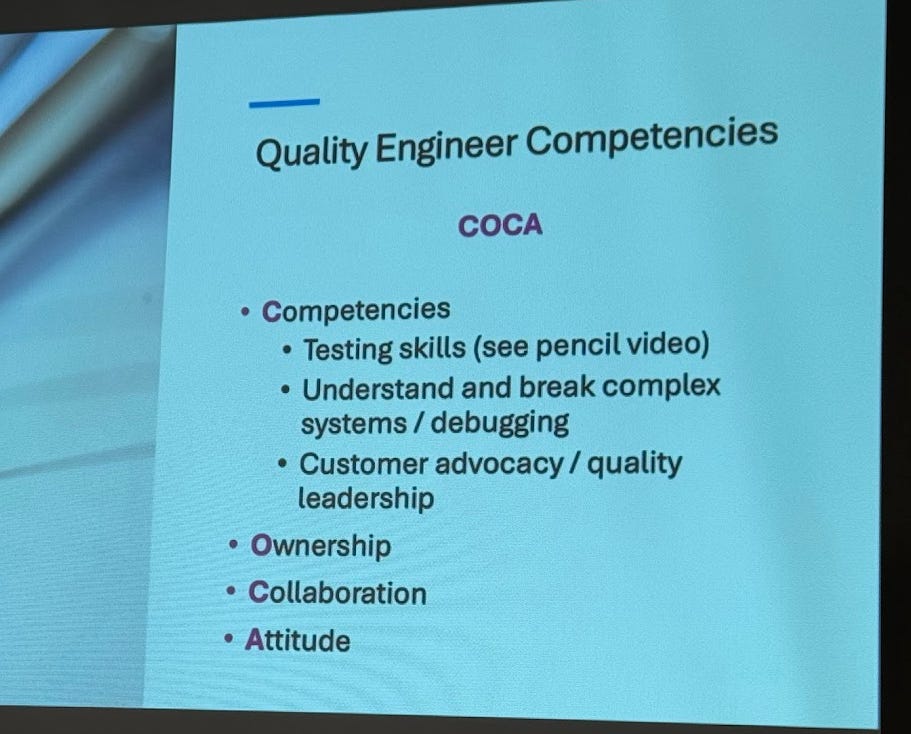

This was a practical session with good stories and takeaways. The COCA model (Competencies, Ownership, Collaboration, Attitude) helped explain what you’re looking for and how you’re assessing candidates.

I realised I’ve been using a similar lens myself, but hadn’t made it explicit for others. COCA is a good approach to making your criteria more explicit. Also, the best source of hires is internal referrals, and they're worth incentivising - it’s their reputation on the line, after all.

Panel: The impact of generative AI on quality engineering

Domain experts (or red teamers) are often the best people to test AIs, especially when the AI is trying to act like them. But those experts still need testing skills - that's where QEs come in.

Build your own agents. Lean into your niche and create tools tailored to it. As quality experts, you can evaluate and improve these tools to benefit yourself and others.

Prompting matters. Language shapes behaviour, so we need to consider how we influence AI systems through our communication with them. Use biased language, get biased output.

Demystifying AI-Driven Testing

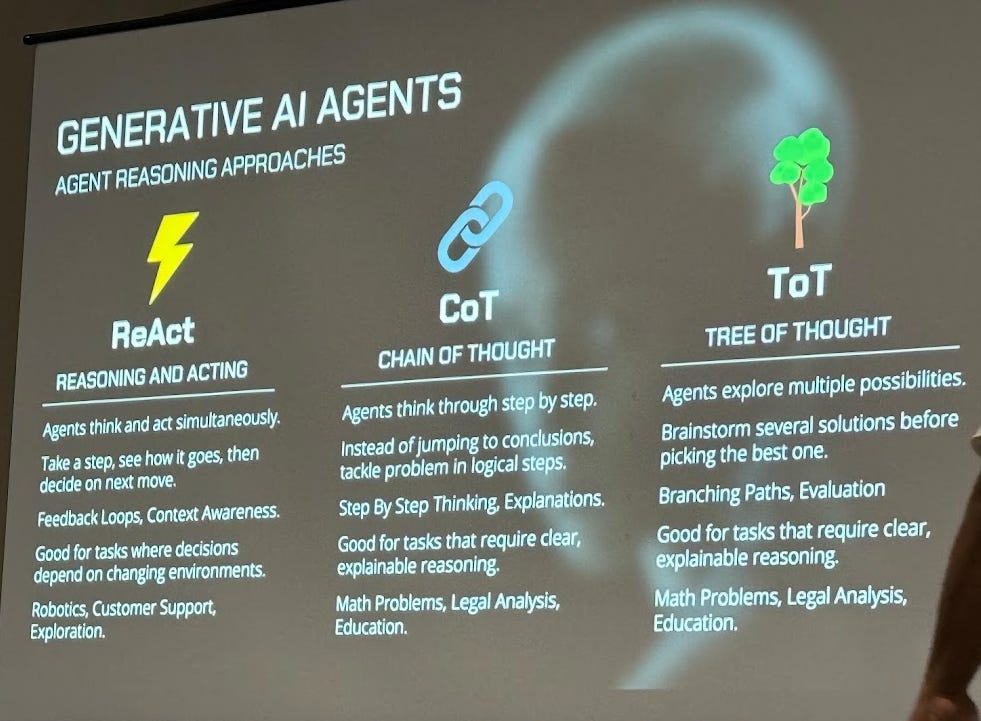

I hadn’t seen the different agent reasoning models before (reasoning and acting, chain of thought and tree of thought). Not every agent gets to the answers in the same way, so we need to use multiple approaches depending on the outcome we want.

And who tests the agents? Well, other agents. Building evaluation agents seems inevitable if we want to scale this. Manually testing everything just doesn’t hold up.
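
To make the agents-testing-agents idea a bit more concrete, here is a minimal Python sketch of my own, not something shown in the talk. Everything in it is hypothetical: `agent_under_test` is a stub standing in for a real model call, and the two evaluators are simple rule checks where a real setup might use another LLM guided by a domain expert.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-ins: in practice each of these would wrap a model call.
Agent = Callable[[str], str]            # prompt -> response
Evaluator = Callable[[str, str], bool]  # (prompt, response) -> passed?


@dataclass
class Verdict:
    prompt: str
    evaluator: str
    passed: bool


def agent_under_test(prompt: str) -> str:
    """Stub agent: returns a canned answer so the sketch runs offline."""
    return f"Refunds are processed within 5 days. (asked: {prompt})"


def mentions_refund_window(prompt: str, response: str) -> bool:
    """Domain check an expert might supply: the answer must state the refund window."""
    return "5 days" in response


def stays_short(prompt: str, response: str) -> bool:
    """Style check: keep answers under 200 characters."""
    return len(response) < 200


def run_evaluation(
    agent: Agent, evaluators: dict[str, Evaluator], prompts: list[str]
) -> list[Verdict]:
    """Send every prompt to the agent, then run every evaluator over each response."""
    verdicts = []
    for prompt in prompts:
        response = agent(prompt)
        for name, check in evaluators.items():
            verdicts.append(Verdict(prompt, name, check(prompt, response)))
    return verdicts


verdicts = run_evaluation(
    agent_under_test,
    {"refund_window": mentions_refund_window, "length": stays_short},
    ["How long do refunds take?", "Can I get my money back?"],
)
failures = [v for v in verdicts if not v.passed]
print(f"{len(verdicts)} checks, {len(failures)} failures")
```

The shape matters more than the checks themselves: each evaluator owns one narrow criterion, so domain experts can add or tighten checks without touching the agent being tested.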

Enhancing skills for effective collaboration and leadership

A good reminder that individual contributors often don't get much support in building communication skills. The speaker had seen big improvements by running workshops on things like candid conversations, communicating with impact, and the “six hats” of engineering. Definitely something more teams could invest in.

Closing panel: The future of test automation

Low/no-code automation works for getting started, but scaling still needs coding skills.

Manual testing still matters for UX, accessibility, and exploration.

Dev unit testing should be incentivised and expected.

Automation ROI is best measured in terms of feedback loops, not just coverage.

Slack time in teams is crucial for automation to flourish. Start small and build up.
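
As a rough illustration of measuring feedback loops rather than coverage, this Python sketch computes the median time between pushing a change and getting the first automated test result. The timestamps are made up, and `median_feedback_minutes` is a hypothetical helper of mine, not a metric the panel defined.

```python
from datetime import datetime
from statistics import median

# Made-up records: (change pushed, first automated test result received)
runs = [
    (datetime(2025, 5, 1, 9, 0), datetime(2025, 5, 1, 9, 12)),
    (datetime(2025, 5, 1, 11, 30), datetime(2025, 5, 1, 11, 38)),
    (datetime(2025, 5, 2, 14, 0), datetime(2025, 5, 2, 14, 45)),
]


def median_feedback_minutes(runs):
    """Median wait, in minutes, between a push and its first test result."""
    waits = [(result - pushed).total_seconds() / 60 for pushed, result in runs]
    return median(waits)


print(median_feedback_minutes(runs))
```

Tracking a number like this over time shows whether automation is actually shortening the loop, which coverage alone can't tell you.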

Final thoughts

AI isn’t replacing testers - it’s reshaping what testing looks like.

It’s putting more weight on judgment, systems thinking, and clear communication. It’s challenging us to be clearer and more concise about the outcomes we want and how to achieve them. I feel this is opening up the space for QEs to lead.

We might not be writing all the code or the tests anymore. But we are making sure the right things happen to build quality in, and that’s never been more important.

Want to learn more?

Check out Notes from StarEast 2025, where I share my personal notes from my favourite talks.

Thanks for reading Quality Engineering Newsletter! This post is public so feel free to share it.