A Fable about GenAI

I’m fortunate enough to be part of two massive online communities. One is the tech community, which is often blithely unaware of its overall impact on nearly everything today as it happily creates things for little reason other than to make something.

The other is the bookish/publishing community, which includes everyone from influencers and creators on BookTok to indie authors and the traditional publishers often referred to, mostly in ominous tones, as the “Big Five”.

Thanks for reading Quality Technologist! Subscribe for free to receive new posts and support my work.

Since GenAI was unleashed in the public market, these two communities have been at war with each other whether they realize it or not.

Tech companies of all flavors and domains are racing to add some kind of LLM/AI assist to their apps without really thinking through the possibilities related to governance and data.

To generate custom messages and responses, you have to have a large data set. Most of these are supplied via scrapes of the internet AND (drum roll) Social Media.

Creatives in books, movies, television, and animation have a sudden fight on their hands over content being created by non-humans, and over content that is mostly under copyright being leveraged to train models.

Faced with this conundrum, companies have retreated to the things they can control: their own customer data. That leads us to social media, ALL social media, and the updates to TOS to include ownership of anything you post.

If you didn’t know, social media mostly works on outrage. The bigger the outrage, the more clicks it usually gets. It doesn’t actively promote pleasant content, and it’s really hard to actually create a timeline of pleasant content. It’s news media mentality magnified by a thousand.

Which then brings us to what happened with Fable and Facebook.

A lot of influencers and creators around books and publishing have learned the lesson of diversifying their media contact points since Musk bought Twitter. Folks now have multiple accounts and ways to engage with their followers.

One of the platforms that has become popular in the bookish communities is Fable.co.

Fable.co is an interactive book club platform. It can also track reading and has community functions that a lot of folks use for any number of reasons.

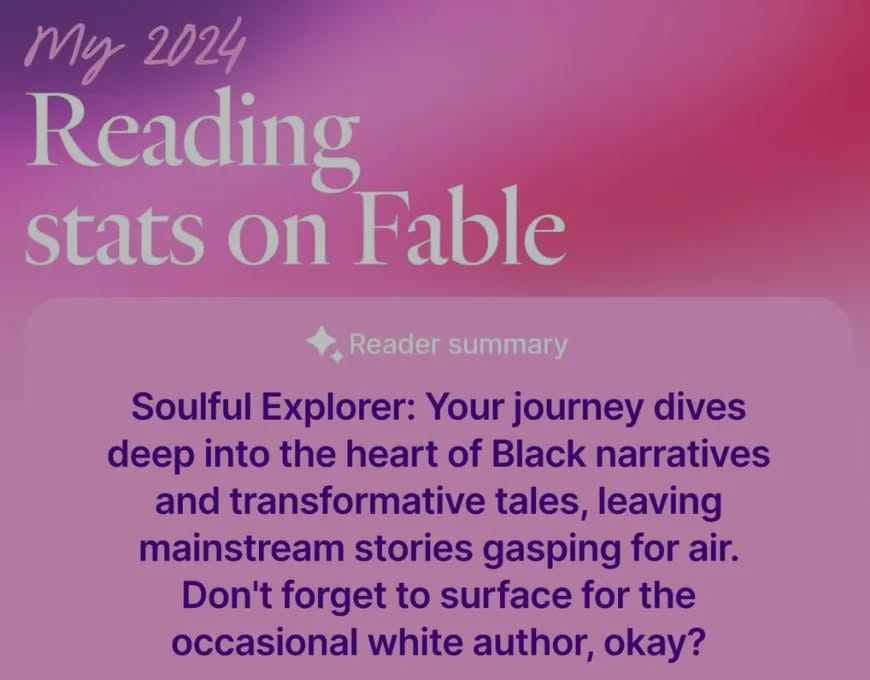

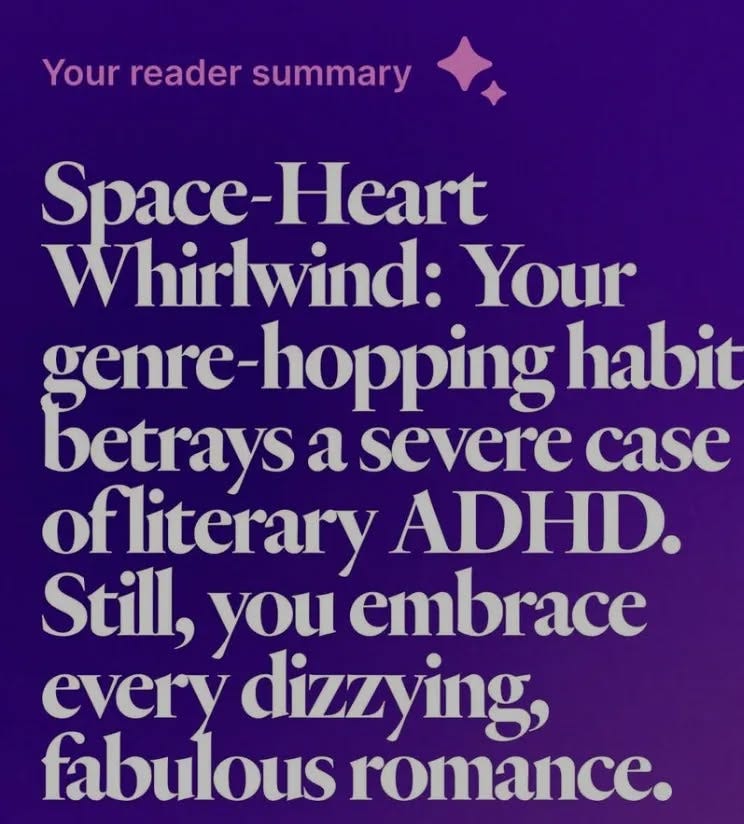

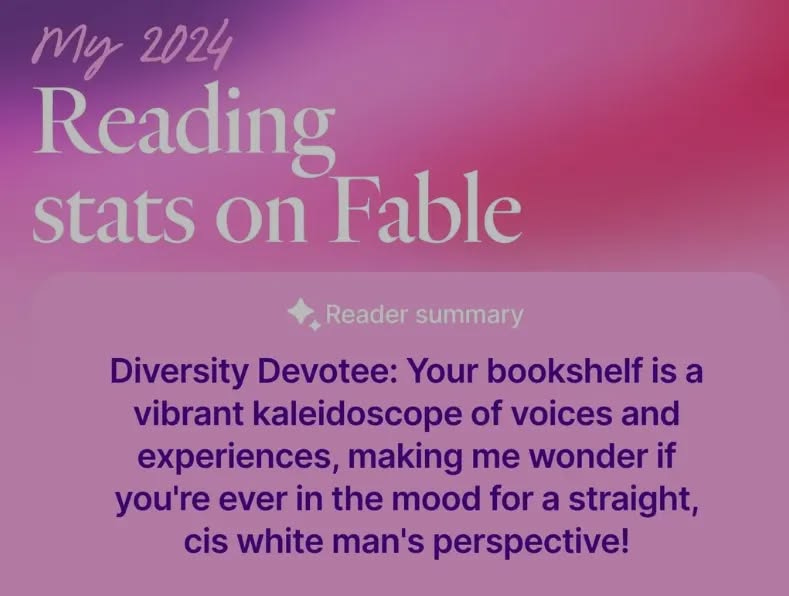

On December 31st, its GenAI feature created year-end paragraphs that wrapped up what readers had engaged with in 2024.

Here are some Fable examples:


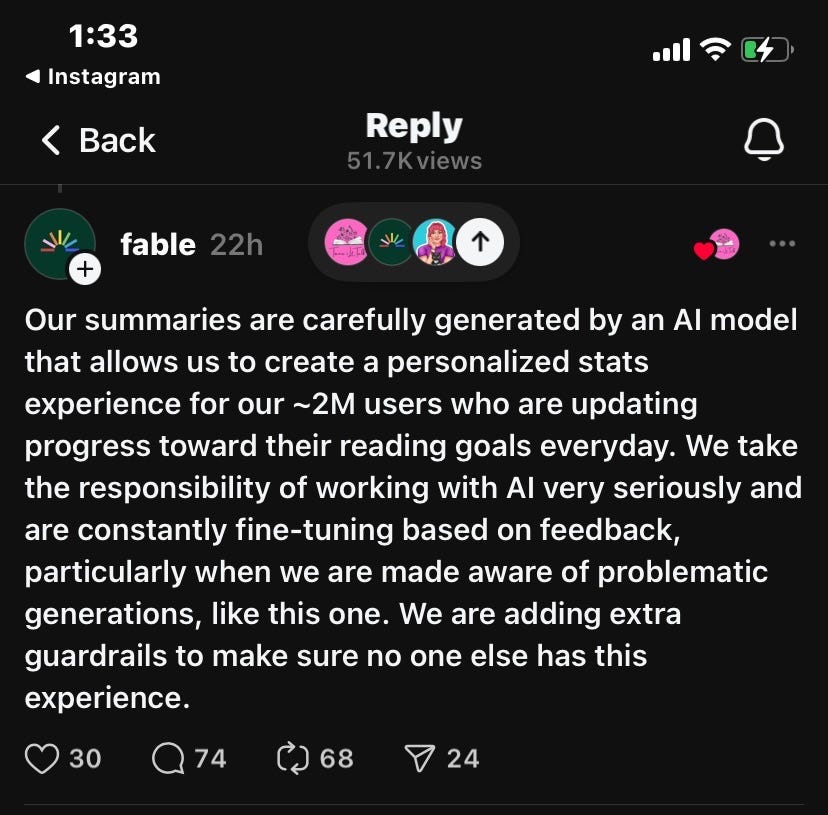

Fable’s response:

Check out the story from MSNBC here about Meta AI accounts.

Karen Attiah’s post about her chats with “Liv”:

We’ve known for some time that algorithms are fairly biased. (Read Algorithms of Oppression: How Search Engines Reinforce Racism.) GenAI/LLMs are BUILT on data created by algorithms. Whatever app you use, no matter how innocuous, uses algorithms (often called services) to transform data to fit the program’s needs.

Data already built with a bias will see that bias magnified in ways that are unpredictable at best. Fable.co has run into exactly that problem. Meta definitely showed its true colors by having a “queer Black woman” persona created by a team of mostly white men.

I have no idea if Fable’s response was also generated by AI, but it does have the feel of a long-winded social media response with little substance and a serious lack of apology.

I think 2025 will see more of these issues. This might not be a bad thing, to be honest. It’s exposing to a larger audience what many marginalized folks in tech have known for years and what companies have ignored or quietly updated.

The problem with GenAI is that you might not find all the issues with the data sets until they’ve caused a PR nightmare like these, unless you create and control the data model yourself. And even then, it’s still on you to make sure it’s not leaning into the most horrible parts of humanity.

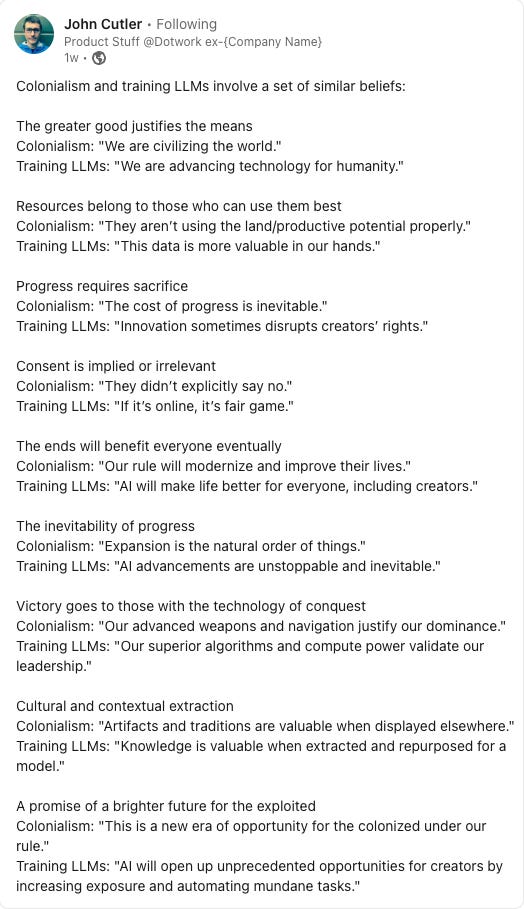

Here’s a great post from John Cutler about attitudes around LLMs/GenAI. The reason we learn from history is so that we can try really hard not to repeat it. Unfortunately, especially in the US, that’s in danger too. (All about book bans here.)
