Is Your Software Testing Approach Flawed? Here’s the Right Way to Do It!

Get to know the industry standards of software testing and avoid the mistakes you might be making along the way.

I am on a unique journey in the realm of software testing, a path less traveled and certainly less defined. Unlike software development, with its well-established standards, software testing lacks clear industry benchmarks, largely due to the high demand for developers. When I joined my company as an intern, I was the lone QA engineer on the team, navigating an unstructured and evolving process. I didn’t know how to test or even what to test, and whatever I did was called Testing 🙃

I started by manually testing new features and catching bugs in the application. I jotted everything down in Google Sheets and later moved it to Confluence. With more than 10 developers working on 14 internal products, all that manual testing left me with very little bandwidth. Once our critical internal apps became more stable, we decided to build an automated test suite for one of our key applications, the Retail Micro Service (RMS). Together with two developers and a few testers, we built a solid test suite that had more test cases than the app's unit tests (which, by industry standards, should not be the case).


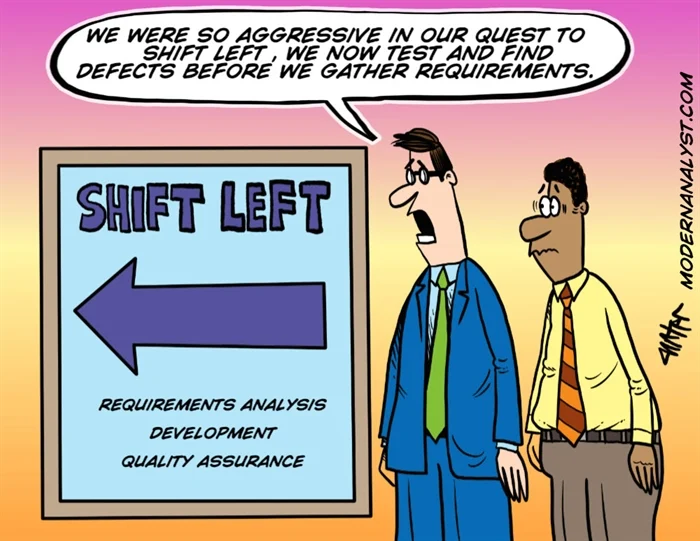

Any experienced tester would laugh at the process we were following. Testing happened at the far-right end of the software development process (after development was complete), so significant issues only came to light during the testing phase. These issues included:

- Unnecessary complexity added to the application, making further development harder.

- Less room to make changes as the deadline approached.

- No input from testers, which could have refined the proposed solution and helped set a realistic due date.

How did we solve these issues?

Shifting left

As a first step, I was relieved of all manual testing and much of the automation work; I now add only test cases that reflect how users actually interact with the application. I started taking part in the solution-design phase of every feature or architectural change, where I critically question the proposed solution to make it less error-prone. Because I know what the new version of the application will do, I can add test cases in parallel while the developer codes the solution. Keeping the test suite up to date this way means much of the testing effort happens alongside development, which leads to faster testing and releases.

What did we do wrong?

Initially, we relied so heavily on our automated test suite that the burden of catching bugs fell on the testers rather than on the applications' unit and integration tests.

What did the old approach cost us?

- Long waits for the test suite, because end-to-end tests are slow to run.

- Rewritten or overlapping test cases between developers and testers, which wasted time.

- Limited QA availability for performance testing.

Let me break down the industry-standard types of testing that we are adopting:

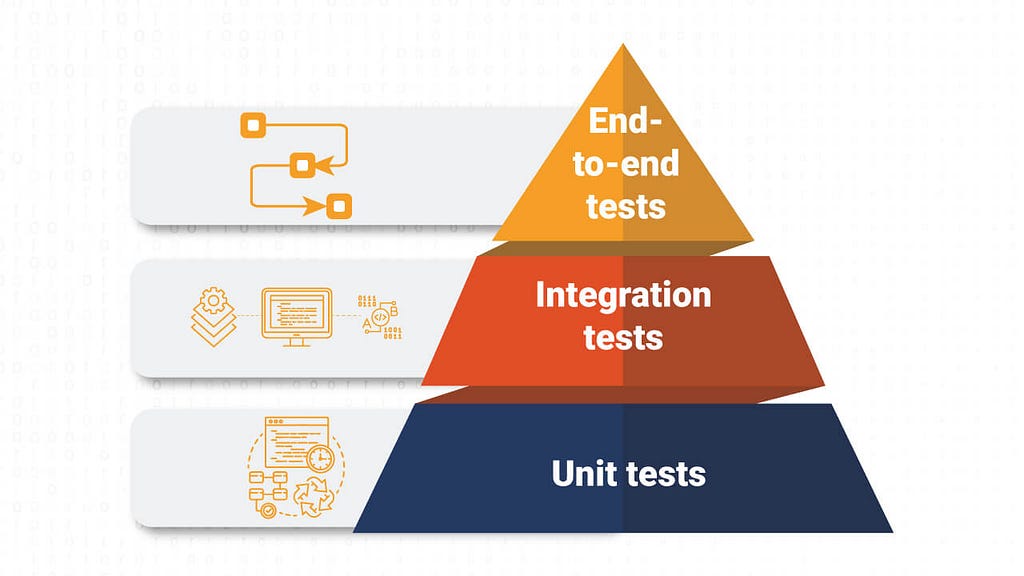

We are implementing Mike Cohn's test pyramid, which he introduced in his 2009 book Succeeding with Agile: Software Development Using Scrum. It stacks three main types of tests by how many of each should be written: the most unit tests at the base, fewer integration tests in the middle, and the fewest end-to-end tests at the top.

Unit Test:

Developers write these tests themselves, covering new code as they write it. Unit tests help ensure that changes to the application don’t break existing functionality. They usually test a single function and take very little time to run, which makes them the most dependable check that everything still works each time new code is pushed or refactored.
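To make this concrete, here is a minimal sketch of a unit test using Python's built-in unittest module. The apply_discount function and its rules are hypothetical, invented only for illustration; the point is that each test exercises one function in isolation, with no database or network, so the whole set runs in milliseconds.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical business function: returns the price after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    # Each test checks one behaviour of a single function, in isolation.

    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(50.0, 150)
```

With the file saved as, say, test_discount.py (a hypothetical name), `python -m unittest test_discount` runs all three tests. Because they are this fast, they can run on every push or refactor.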

Integration Test:

These tests are also written by developers. They mock external dependencies such as databases, other microservices, and third-party services, so we can feed the application controlled responses and check how it processes each one.
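A minimal sketch of this style of test, assuming Python and unittest.mock from the standard library. OrderService, its inventory_client dependency, and the get_stock method are hypothetical names, not part of RMS. The mock returns controlled responses, including a simulated timeout, so each branch of the application's handling can be checked without a live service.

```python
from unittest import mock


class OrderService:
    """Hypothetical service under test; inventory_client wraps another microservice."""

    def __init__(self, inventory_client):
        self.inventory_client = inventory_client

    def place_order(self, sku: str, quantity: int) -> str:
        try:
            available = self.inventory_client.get_stock(sku)
        except TimeoutError:
            return "RETRY_LATER"
        return "CONFIRMED" if available >= quantity else "OUT_OF_STOCK"


def test_order_confirmed_when_stock_is_available():
    client = mock.Mock()
    client.get_stock.return_value = 5  # mocked response from the inventory service
    assert OrderService(client).place_order("SKU-1", 2) == "CONFIRMED"
    client.get_stock.assert_called_once_with("SKU-1")


def test_order_rejected_when_stock_is_low():
    client = mock.Mock()
    client.get_stock.return_value = 1
    assert OrderService(client).place_order("SKU-1", 2) == "OUT_OF_STOCK"


def test_timeout_from_dependency_is_handled():
    client = mock.Mock()
    client.get_stock.side_effect = TimeoutError  # simulate an unresponsive service
    assert OrderService(client).place_order("SKU-1", 2) == "RETRY_LATER"
```

The functions follow pytest naming conventions, so a runner such as pytest would collect them, but they are plain functions and can also be called directly.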

End-to-End Test (E2E):

This is where testers come in. We write tests that run against a deployed test environment, using an actual database and separate mock services whose sample responses mimic real-world calls. Each test is a series of calls to the application, for example from placing an order to creating a return order, so these tests are slow to execute. We therefore keep their number small while still covering 90% of actual real-world calls.
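The order-to-return journey can be sketched as a single E2E-style scenario. In a real suite the calls would go over the network to the deployed test environment; to keep this sketch self-contained, a hypothetical in-process RetailApp backed by a real (in-memory) SQLite database stands in for the application, and its table and status names are assumptions. The key idea is that the test walks the whole flow and then checks what was actually persisted.

```python
import sqlite3


class RetailApp:
    """Hypothetical stand-in for the application under test, backed by a real SQLite database."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        # Schema is an assumption for illustration only.
        conn.execute(
            "CREATE TABLE orders (id INTEGER PRIMARY KEY, sku TEXT NOT NULL, status TEXT NOT NULL)"
        )
        conn.execute("CREATE TABLE returns (id INTEGER PRIMARY KEY, order_id INTEGER NOT NULL)")

    def create_order(self, sku: str) -> int:
        cur = self.conn.execute("INSERT INTO orders (sku, status) VALUES (?, 'PLACED')", (sku,))
        return cur.lastrowid

    def deliver_order(self, order_id: int) -> None:
        self.conn.execute("UPDATE orders SET status = 'DELIVERED' WHERE id = ?", (order_id,))

    def create_return(self, order_id: int) -> int:
        (status,) = self.conn.execute(
            "SELECT status FROM orders WHERE id = ?", (order_id,)
        ).fetchone()
        if status != "DELIVERED":
            raise ValueError("only delivered orders can be returned")
        cur = self.conn.execute("INSERT INTO returns (order_id) VALUES (?)", (order_id,))
        self.conn.execute(
            "UPDATE orders SET status = 'RETURN_REQUESTED' WHERE id = ?", (order_id,)
        )
        return cur.lastrowid


def test_order_to_return_journey() -> None:
    app = RetailApp(sqlite3.connect(":memory:"))

    # Place an order, deliver it, then request a return: one user journey.
    order_id = app.create_order("SKU-1")
    app.deliver_order(order_id)
    return_id = app.create_return(order_id)

    # Verify what was persisted in the database, not only what the calls returned.
    (status,) = app.conn.execute("SELECT status FROM orders WHERE id = ?", (order_id,)).fetchone()
    assert status == "RETURN_REQUESTED"
    (linked_order,) = app.conn.execute(
        "SELECT order_id FROM returns WHERE id = ?", (return_id,)
    ).fetchone()
    assert linked_order == order_id


def test_return_before_delivery_is_rejected() -> None:
    app = RetailApp(sqlite3.connect(":memory:"))
    order_id = app.create_order("SKU-2")
    try:
        app.create_return(order_id)
    except ValueError:
        pass  # expected: the order was never delivered
    else:
        raise AssertionError("return should be rejected for an undelivered order")
```

One journey test like this covers several calls at once, which is why a handful of well-chosen E2E scenarios can stand in for many isolated checks, at the cost of slower runs.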

These tests generally catch errors like:

- Security configuration bugs in the application

- Duplicate entries in the database, since the database is mocked in the other two types of testing

- Inconsistent or incorrect values stored in the database or sent to other microservices or third-party applications

- Backward-compatibility issues

- Unexpected data changes caused by a combination of different API calls
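The duplicate-entry case from the list above is easy to miss with mocks. This sketch assumes a hypothetical record_payment handler that a client retry calls twice for the same order (the retry scenario is invented for illustration). A mocked database simply records both calls, while a real SQLite database ends up holding two rows, which an E2E assertion such as "exactly one payment per order" would flag.

```python
import sqlite3
from unittest import mock


def record_payment(db, order_id: int, amount: float) -> None:
    """Hypothetical handler with no duplicate protection."""
    db.execute("INSERT INTO payments (order_id, amount) VALUES (?, ?)", (order_id, amount))


def simulate_retry(db) -> None:
    # The same request is delivered twice, e.g. after a network timeout.
    record_payment(db, 42, 19.99)
    record_payment(db, 42, 19.99)


def mocked_db_check() -> bool:
    """With a mocked database, both calls 'succeed' and nothing looks wrong."""
    db = mock.Mock()
    simulate_retry(db)
    return db.execute.call_count == 2  # the mock only records the calls


def real_db_check() -> int:
    """Against a real database, the duplicate becomes visible as stored data."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE payments (order_id INTEGER, amount REAL)")
    simulate_retry(conn)
    return conn.execute("SELECT COUNT(*) FROM payments WHERE order_id = 42").fetchone()[0]


print(mocked_db_check())  # True
print(real_db_check())    # 2: an "exactly one payment" E2E check would fail here
```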

Making sure the normal flow of the application works as intended matters most. Prioritizing the main functionality and user interactions helps deliver a reliable product, whereas hunting for bugs with a very low probability or low priority (those causing minimal damage) adds little value. Allocate resources efficiently: emphasize the stability and usability of the application rather than spending excessive time on edge cases that are unlikely to affect the overall user experience.

Manual testing still has its place, but automation is essential for testing applications. Our automated test cases give us far more confidence than manual testing ever did.

Final Thoughts

Tester bandwidth becomes a bottleneck when most of the effort goes into manual testing or into duplicating tests that developers have already written. It took our company 7 years to realize this, and it might be happening in your company as well. Proper E2E automation with only the necessary test cases can save significant time. Testers can then focus on performance testing, share their perspective in solution design, and contribute to CI/CD development, all of which provide a higher ROI (return on investment). They also gain time to sharpen their knowledge and build more robust tests.

Happy Testing!

Author: M M Kishore

Stay connected and stay informed with ProductPanda! If you’re a recent graduate who’s hungry for tech knowledge, make sure to follow us 🎉 on our journey 🚀