Productivity Secrets: Using AI to Automate Tedious Tasks

Time is one of our most important resources. If you can speed up a process, do it: the time you free up can go to more creative work. AI can handle a wide variety of tasks, and I use it in many areas, not just at work.

In this article, I want to show how I sped up one task tenfold with a single extension. I work in web and mobile development on a distributed team, so all our communication is online. We care about our employees' professional development, and part of that is sharing information about online events (conferences, webinars, and so on) that they can take part in. Every quarter, I go through several websites, gather event information, and add it to our corporate Notion.

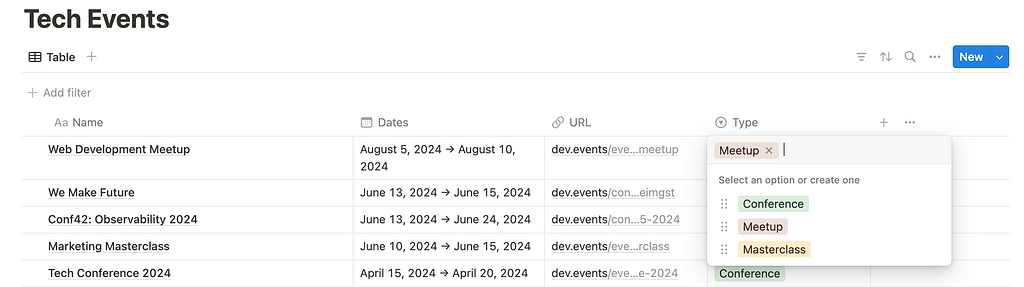

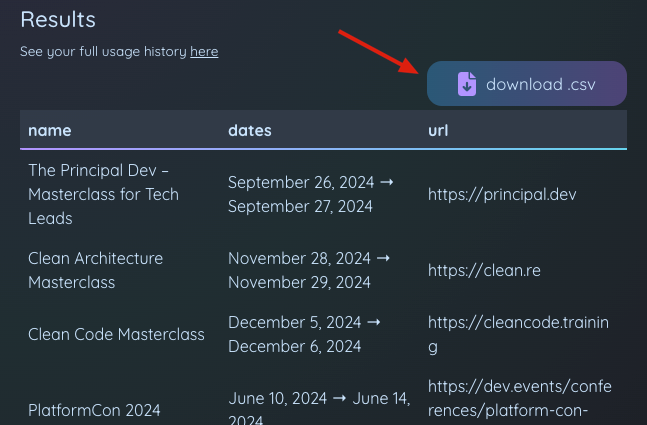

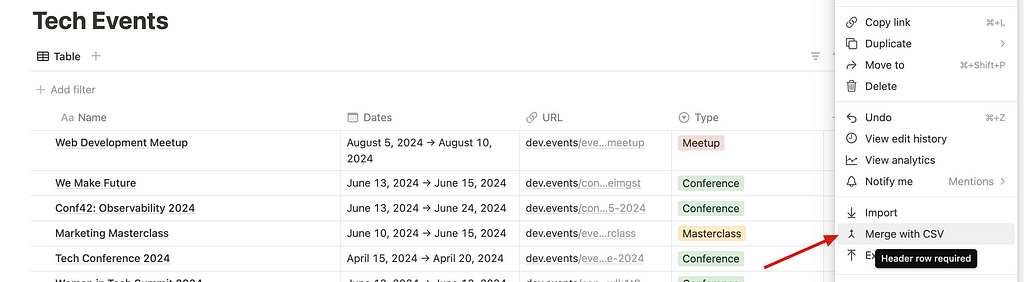

The data lives in a Notion database: essentially a table with the event name, dates, link, and event type (one value from a fixed list). Every quarter, I find about 50 events across 5–6 websites. Can you imagine how long it would take to copy and paste the data for each one by hand?
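To make the shape of one row concrete, here is a minimal Python sketch of such a record. The class names, the column names (`Name`, `Start`, `End`, `Link`, `Type`), and the two event type values are illustrative assumptions, not the exact properties of the Notion database described here.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class EventType(Enum):
    # Hypothetical options; the real list is whatever the Notion column allows.
    CONFERENCE = "Conference"
    WEBINAR = "Webinar"


@dataclass
class Event:
    name: str
    start: date
    end: date
    link: str
    type: EventType

    def to_row(self) -> dict:
        """Flatten the record into plain strings, one per table column."""
        return {
            "Name": self.name,
            "Start": self.start.isoformat(),  # YYYY-MM-DD
            "End": self.end.isoformat(),
            "Link": self.link,
            "Type": self.type.value,
        }
```

With about 50 events per quarter, that is 50 of these rows, each with five fields to fill in by hand.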

This is where AI comes to the rescue, specifically an extension for collecting structured data from web pages. By the way, I developed it myself as a side project. Check it out at https://aiscraper.co/. So how can we collect structured data from any website in a minute?

Here are the steps:

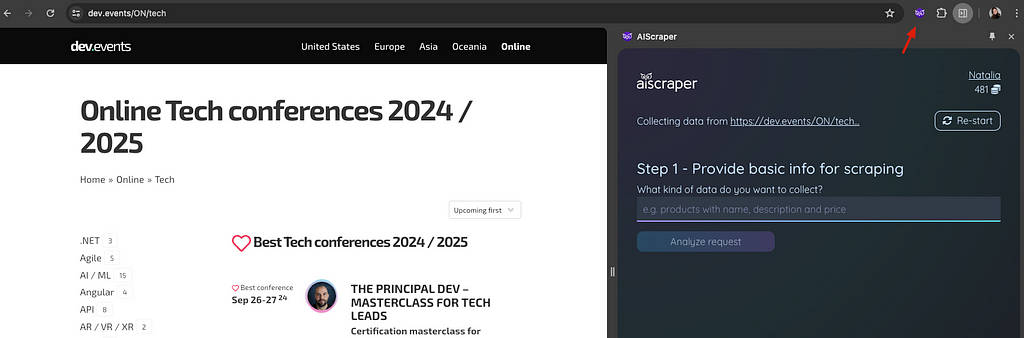

1. Open the page you want to scrape, then open the extension.

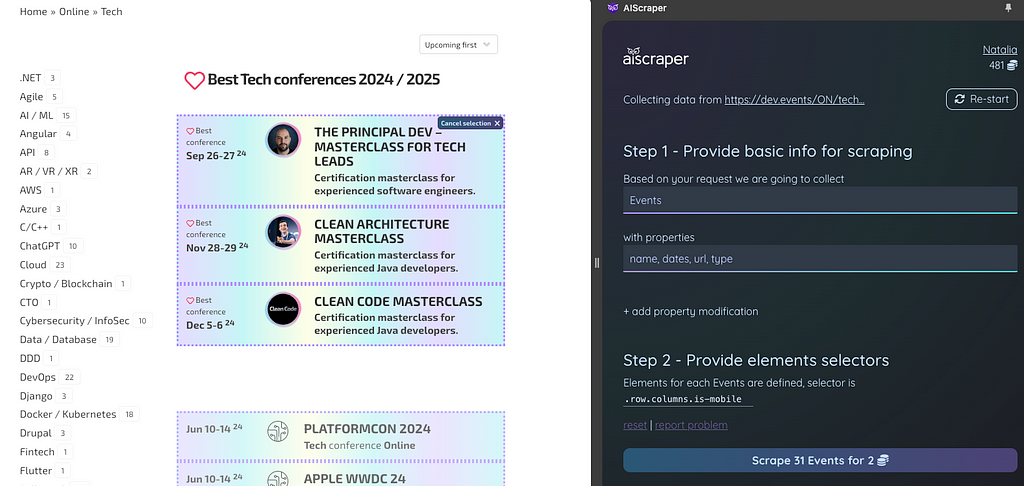

2. Specify what data you want to collect.

3. Check the result of the request analysis: the scraper works out what kind of data you want and highlights the matching pieces on the page.

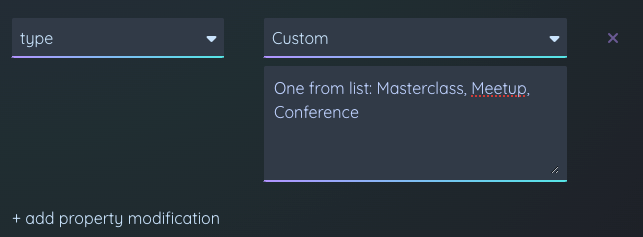

4. Add modifications:

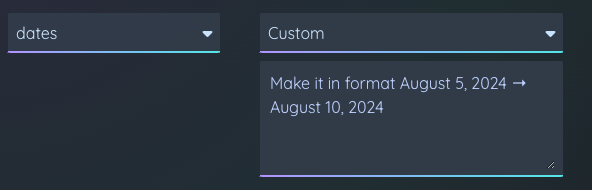

- Dates, so they are in a format that Notion understands.

- Event type, to clarify the expected values.

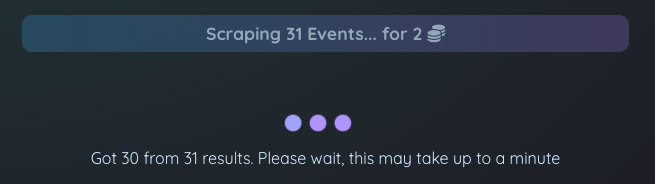

5. Run the scraping.

6. Download the CSV.

7. Import it into Notion.

8. Repeat for the other websites.
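The two modifications in step 4 amount to a normalization pass over the scraped values. If you ever need to do the same cleanup yourself on a downloaded CSV, it could look roughly like the sketch below. This is not how the extension works internally. The column names (`Date`, `Type`), the list of input date formats, and the event type mapping are all assumptions for illustration, and ISO 8601 (`YYYY-MM-DD`) is chosen here simply because it is unambiguous.

```python
import csv
import io
from datetime import datetime

# Assumed input formats that event pages might use; extend as needed.
# Month-name formats rely on an English locale.
DATE_FORMATS = ("%Y-%m-%d", "%d.%m.%Y", "%B %d, %Y", "%b %d, %Y", "%d %B %Y")

# Hypothetical mapping from free-form labels to the allowed list values.
EVENT_TYPES = {
    "conference": "Conference",
    "conf": "Conference",
    "webinar": "Webinar",
    "online seminar": "Webinar",
}


def normalize_date(raw: str) -> str:
    """Return the date as YYYY-MM-DD, or the original text if nothing matches."""
    text = raw.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return text


def normalize_type(raw: str, default: str = "Other") -> str:
    """Map a scraped label onto one of the expected values."""
    return EVENT_TYPES.get(raw.strip().lower(), default)


def clean_csv(source: str) -> str:
    """Rewrite the Date and Type columns of a CSV given as a string."""
    reader = csv.DictReader(io.StringIO(source))
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        row["Date"] = normalize_date(row["Date"])
        row["Type"] = normalize_type(row["Type"])
        writer.writerow(row)
    return out.getvalue()
```

Unrecognized dates are passed through unchanged rather than dropped, so anything the sketch cannot parse is still visible for a manual fix after the import.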

As a result, collecting data from each site takes no more than a minute, and we end up with a ready-to-share digest of online events for our colleagues.

I recorded a video of this process so you can see that it takes almost no effort. (The video shows a previous version of the scraper; the process is even simpler now.)

Share in the comments if you have tasks that an AI scraper could help with!