AI is coming for your job … sort of

It’s time to stop being afraid of the unknown and embrace the change

Hola Friends,

I meant to post this about two weeks ago, prompted by a Reddit post and a fortuitous conversation, but wrote up a very spicy piece instead. So let’s kick this conversation into gear, shall we?

Some time back, a Reddit user asked, “How worried is QA about AI and Automation?” Several of the answers helped dispel the OP’s idea that AI is going to replace the Quality Assurance Analyst/Engineer. First, let’s discuss what a “GPT” actually is and why “artificial intelligence” is a misnomer.

The Definition

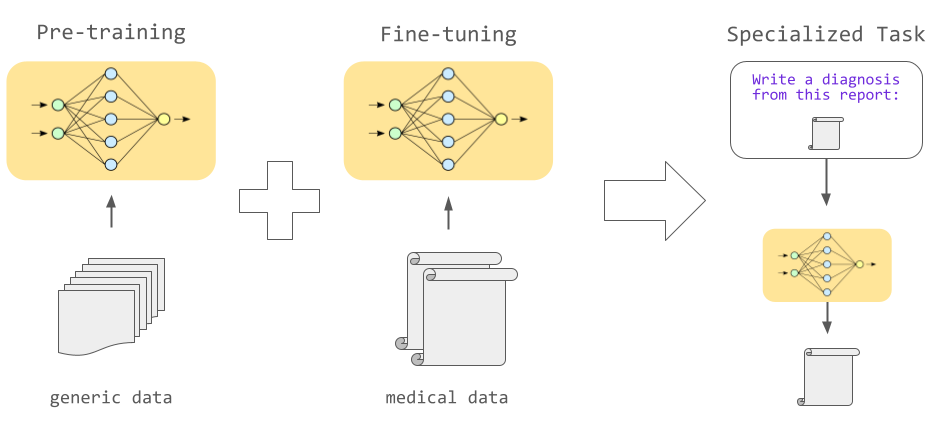

The “GPT” in ChatGPT is short for generative pre-trained transformer. In the field of AI, training refers to the process of teaching a computer system to recognize patterns and make decisions based on input data[1]. You, the user, enter a statement (a prompt), and the response is synthesized from patterns learned across a large, complex body of aggregated training data.

Artificial intelligence is the field of study in computer science that develops and studies intelligent machines[2]. In this article, Paul Whiteley, Emeritus Professor of Government at the University of Essex, explains why the term artificial intelligence is a misnomer.

A large language model (LLM) is a language model notable for its ability to achieve general-purpose language generation and understanding. LLMs acquire these abilities by learning statistical relationships from text documents during a computationally intensive self-supervised and semi-supervised training process.[3]

Here’s why I’m not worried, using the Software Testing Life Cycle (STLC) as the framework for my opinion:

- Planning Phase: There is nothing about AI that can replace the collaborative esprit de corps that goes into the exchange of ideas and creative discourse around a feature to be tested. As QA, we add value to the planning effort by speaking up about what makes a good user experience, what UI to expect for validation, and how to compose the best set of test scenarios.

Advantage — People

- Design Phase: If the recent Google Gemini incident is any indication of where we are in the AI maturity lifecycle, there is absolutely nothing to fear. Designers are the best possible resources to work with on effective interface design, color theory, the best fonts for web and mobile, and workflows for optimal engagement. At best, AI will provide a “best guess” at what is trendy, and without context it cannot do any better.

Advantage — People

- Development Phase: If we’re applying a “shift left” approach to the development test effort, helping to write unit tests is where AI will be advantageous. QA and Devs will still have a hand in testing and finding bugs early, but at some point in the sprint this feedback loop will eat into velocity, and being able to prompt for common unit tests for a given input or feature will save time. It will still be up to the developer to use the output of the prompt and keep what is valuable and relevant.

Advantage — Tied

- Testing Phase: For QA, this is where our value lies. Our job during the previous phases is to be a voice representing the user/consumer. We have been at the planning meetings and added our input. We collaborated with our Dev team to ensure early feedback on work under development. Now we are tasked with executing the plan. AI can become an ally by suggesting test scenarios we might not have considered. AI may be able to draw up a serviceable test plan, a checklist, or even some metrics. Without proper context, however, asking AI to test our work is no better than asking a friend who is unfamiliar with the product or with testing fundamentals. It’s still a useful ally to have when you need ideas. GitHub Copilot, anyone?

Advantage — Tied

- Deployment Phase: I have seen attempts to apply AI to Continuous Integration, but I’m not yet convinced it offers any real advantage over the tooling currently in use. I need more information before making a call.

Advantage — TBD
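
To make the Development Phase point a little more concrete, here is a minimal sketch of the kind of “common unit tests for a given input” an AI assistant might draft. The function `apply_discount` and its rules are hypothetical, invented purely for illustration; the tests are the usual happy-path, boundary, and invalid-input checks that you would still need to review, keep, or throw away yourself.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical feature under test: apply a percentage discount to a price."""
    if price < 0:
        raise ValueError("price must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class TestApplyDiscount(unittest.TestCase):
    # Happy path: the "obvious" case an assistant will almost always suggest.
    def test_typical_discount(self):
        self.assertEqual(apply_discount(100.0, 20), 80.0)

    # Boundary values: the lowest and highest allowed percentages.
    def test_zero_percent(self):
        self.assertEqual(apply_discount(50.0, 0), 50.0)

    def test_full_discount(self):
        self.assertEqual(apply_discount(50.0, 100), 0.0)

    # Invalid input: these should be rejected rather than silently computed.
    def test_negative_price_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(-1.0, 10)

    def test_percent_out_of_range_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(10.0, 150)
```

Assuming the file is saved as `test_discount.py`, running `python -m unittest test_discount` executes the suite. Notice what the tests cannot tell you: whether a 100% discount should even be allowed in your product. That call still belongs to the people who were in the room during planning.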

The conversation

As mentioned earlier, the second inspiration for this very opinionated take was a meeting I had with an entrepreneur. She proposed leveraging machine learning and LLMs in the testing process, specifically as a means of generating test artifacts from prompts. My two main points against this idea were:

- Asking ChatGPT to draw up a test plan or test scenarios at this stage of its maturity is like asking a five-year-old to draw an airplane. You might get an approximation (wings, a body, etc.), but it will be a far cry from what you were expecting. Human intervention is still required.

- Testing is a nuanced discipline requiring objectivity and context. We are like scientists challenging the stated criteria by running a battery of tests to prove or disprove them. At this stage of its maturity, ChatGPT cannot reliably test for things like usability, edge cases, and other forms of exploratory testing. This might change in five years, but right now it is not a concern.

Conclusion — nothing to worry about

AI is not at the point in its maturity where we as Quality Assurance Analysts & Engineers need to be concerned.

AI will be coming for your job, but only insofar as it will take on the mundane, boring tasks and free you up to do the important things, not unlike what test automation does now.

Having AI as your ally is like having a calculator when you need to do complex math, or using Grammarly to help with your writing. GitHub Copilot can help with coding, but it’s up to you, the user, to make the code fit your work. And that is something AI just cannot do.

AI will help you find a great cake recipe, but it can’t bake the cake. The same goes for testing: it will help you generate scenarios and write code, but it can’t do much else.

That’s my take. I’d love your feedback if you feel otherwise.

Until next time,

ciao for now

1. https://www.coursera.org/articles/chatgpt
2. https://en.wikipedia.org/wiki/Artificial_intelligence
3. “Better Language Models and Their Implications”. OpenAI. 14 February 2019. Archived from the original on 19 December 2020. Retrieved 25 August 2019.